MOT Administration

The following describes various MOT administration topics.

MOT Durability

Durability refers to long-term data protection (also known as disk persistence). Durability means that stored data does not suffer from any kind of degradation or corruption, so that data is never lost or compromised. Durability ensures that data and the MOT engine are restored to a consistent state after a planned shutdown (for example, for maintenance) or an unplanned crash (for example, a power failure).

Memory storage is volatile, meaning that it requires power to maintain the stored information. Disk storage, on the other hand, is non-volatile: it does not require power to maintain stored information and can therefore survive a power shutdown. MOT uses both types of storage. It keeps all data in memory, while persisting transactional changes to disk through the WAL Redo Log and maintaining frequent periodic MOT Checkpoints in order to ensure data recovery in case of shutdown.

The user must ensure sufficient disk space for the logging and Checkpointing operations. A separate drive can be used for the Checkpoint to improve performance by reducing disk I/O load.

You may refer to the MOT Key Technologies section for an overview of how durability is implemented in the MOT engine.

To configure durability -

To ensure strict consistency, set the synchronous_commit parameter to On in the postgresql.conf configuration file.

MOT's WAL Redo Log and Checkpoints enable durability, as described below -

MOT Logging - WAL Redo Log

To ensure durability, MOT is fully integrated with MogDB's Write-Ahead Logging (WAL) mechanism, so that MOT persists data in WAL records using MogDB's XLOG interface. This means that every addition, update, and deletion to an MOT table’s record is recorded as an entry in the WAL. This ensures that the most current data state can be regenerated and recovered from this non-volatile log. For example, if three new rows were added to a table, two were deleted and one was updated, then six entries would be recorded in the log.
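The entry count in the example above can be reproduced with a toy log. This is only an illustrative sketch: the `RedoLog` class and its `record` method are hypothetical names and do not correspond to MogDB's XLOG interface.

```python
from dataclasses import dataclass, field


@dataclass
class RedoLog:
    """Toy append-only log (hypothetical): one entry per row-level change."""
    entries: list = field(default_factory=list)

    def record(self, op, key, value=None):
        self.entries.append((op, key, value))


def run_example():
    log = RedoLog()
    table = {}
    # Three rows are added.
    for key in (1, 2, 3):
        table[key] = f"row-{key}"
        log.record("INSERT", key, table[key])
    # Two rows are deleted.
    for key in (1, 2):
        del table[key]
        log.record("DELETE", key)
    # One row is updated.
    table[3] = "row-3-updated"
    log.record("UPDATE", 3, table[3])
    return table, log


table, log = run_example()
print(len(log.entries))  # 3 inserts + 2 deletes + 1 update
```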

MOT log records are written to the same WAL as the other records of MogDB disk-based tables.

MOT only logs an operation at the transaction commit phase.

MOT only logs the updated delta record in order to minimize the amount of data written to disk.

During recovery, data is loaded from the last known or a specific Checkpoint, and then the WAL Redo Log is used to replay the data changes that occurred from that point forward.

The WAL (Redo Log) retains all the table row modifications until a Checkpoint is performed (as described above). The log can then be truncated in order to reduce recovery time and to save disk space.
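The interplay of Checkpoint, log truncation and replay can be sketched in a few lines. This is a simplified model under stated assumptions, not the MOT implementation: the table is a plain dictionary, and the function names (`apply`, `take_checkpoint`, `recover`) are hypothetical.

```python
def apply(table, entry):
    """Apply one redo entry (op, key, value) to an in-memory table."""
    op, key, value = entry
    if op == "DELETE":
        table.pop(key, None)
    else:  # INSERT or UPDATE
        table[key] = value


def take_checkpoint(table, log):
    """Snapshot the table, then truncate the log it makes redundant."""
    snapshot = dict(table)
    log.clear()
    return snapshot


def recover(checkpoint, log):
    """Load the Checkpoint, then replay the changes logged after it."""
    table = dict(checkpoint)
    for entry in log:
        apply(table, entry)
    return table


def demo():
    table, log = {}, []

    def change(op, key, value=None):
        entry = (op, key, value)
        log.append(entry)      # log the change first
        apply(table, entry)    # then change the in-memory data

    change("INSERT", 1, "a")
    change("INSERT", 2, "b")
    checkpoint = take_checkpoint(table, log)
    change("UPDATE", 1, "a2")
    change("DELETE", 2)
    # Simulated crash: only the checkpoint and the log survive.
    recovered = recover(checkpoint, log)
    return table, recovered, len(log)
```

In this sketch only the two changes made after the Checkpoint remain in the log, which is why truncation shortens recovery.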

Note - In order to ensure that the log IO device does not become a bottleneck, the log file must be placed on a drive that has low latency.

MOT Logging Types

Two synchronous transaction logging options and one asynchronous transaction logging option are supported (these are also supported by the standard MogDB disk engine). MOT also supports synchronous Group Commit logging with NUMA-awareness optimization, as described below.

According to your configuration, one of the following types of logging is implemented -

- Synchronous Redo Logging

The Synchronous Redo Logging option is the simplest and most strict redo logger. When a transaction is committed by a client application, the transaction redo entries are recorded in the WAL (Redo Log), as follows -

- While a transaction is in progress, it is stored in the MOT's memory.

- After a transaction finishes and the client application sends a Commit command, the transaction is locked and then written to the WAL Redo Log on the disk. This means that while the transaction log entries are being written to the log, the client application is still waiting for a response.

- As soon as the transaction's entire buffer is written to the log, the changes to the data in memory take place and then the transaction is committed. After the transaction has been committed, the client application is notified that the transaction is complete.
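The ordering in the steps above (write to the log, then change memory, then notify the client) can be illustrated as follows. This is a minimal sketch that assumes a plain file stands in for the WAL; `SyncRedoLogger` is a hypothetical name, and transaction locking is omitted.

```python
import os
import tempfile


class SyncRedoLogger:
    """Hypothetical synchronous redo logger backed by a plain file."""

    def __init__(self, path):
        self._file = open(path, "ab")

    def commit(self, store, txn_writes):
        # 1. Serialize the transaction's redo entries.
        payload = "".join(f"{k}={v}\n" for k, v in txn_writes.items()).encode()
        # 2. Write them and force them to disk; the caller is blocked here.
        self._file.write(payload)
        self._file.flush()
        os.fsync(self._file.fileno())
        # 3. Only now apply the changes to the in-memory store.
        store.update(txn_writes)
        # 4. Report completion to the client.
        return "COMMITTED"

    def close(self):
        self._file.close()


def demo():
    store = {}
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "redo.log")
        logger = SyncRedoLogger(path)
        status = logger.commit(store, {"a": 1, "b": 2})
        logger.close()
        with open(path, "rb") as f:
            on_disk = f.read().decode()
    return status, store, on_disk
```

Because the client is acknowledged only after `os.fsync` returns, a transaction reported as committed is already on disk in this model.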

Summary

The Synchronous Redo Logging option is the safest and most strict because it ensures total synchronization of the client application and the WAL Redo log entries for each transaction as it is committed; thus ensuring total durability and consistency with absolutely no data loss. This logging option prevents the situation where a client application might mark a transaction as successful, when it has not yet been persisted to disk.

The downside of the Synchronous Redo Logging option is that it is the slowest logging mechanism of the three options. This is because a client application must wait until all data is written to disk and because of the frequent disk writes (which typically slow down the database).

- Group Synchronous Redo Logging

The Group Synchronous Redo Logging option is very similar to the Synchronous Redo Logging option, because it also ensures total durability with absolutely no data loss and total synchronization of the client application and the WAL (Redo Log) entries. The difference is that the Group Synchronous Redo Logging option writes groups of transaction redo entries to the WAL Redo Log on the disk at the same time, instead of writing each and every transaction as it is committed. Using Group Synchronous Redo Logging reduces the number of disk I/Os and thus improves performance, especially when running a heavy workload.

The MOT engine performs synchronous Group Commit logging with Non-Uniform Memory Access (NUMA)-awareness optimization by automatically grouping transactions according to the NUMA socket of the core on which the transaction is running.

You may refer to the NUMA Awareness Allocation and Affinity section for more information about NUMA-aware memory access.

When a transaction commits, a group of entries is recorded in the WAL Redo Log, as follows -

- While a transaction is in progress, it is stored in memory. The MOT engine groups transactions in buckets according to the NUMA socket of the core on which the transaction is running. This means that all the transactions running on the same socket are grouped together and that multiple groups fill in parallel, according to the core on which each transaction is running.

Writing transactions to the WAL is more efficient in this manner because all the buffers from the same socket are written to disk together.

Note - Each thread runs on a single core/CPU which belongs to a single socket and each thread only writes to the socket of the core on which it is running.

- After a transaction finishes and the client application sends a Commit command, the transaction redo log entries are serialized together with other transactions that belong to the same group.

- After the configured criteria are fulfilled for a specific group of transactions (quantity of committed transactions or timeout period, as described in the REDO LOG (MOT) section), the transactions in this group are written to the WAL on the disk. This means that while these log entries are being written to the log, the client applications that issued the commit are waiting for a response.

- As soon as all the transaction buffers in the NUMA-aware group have been written to the log, all the transactions in the group perform the necessary changes to the memory store and the clients are notified that these transactions are complete.
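The grouping steps above can be sketched as one pending bucket per NUMA socket. This is an illustrative model only: `GroupCommitLogger` and its attributes are hypothetical, the socket number is passed in explicitly, each list appended to `disk_writes` stands for a single disk write, and only the transaction-count criterion is modeled (calling `flush` by hand stands in for the timeout).

```python
from collections import defaultdict


class GroupCommitLogger:
    """Hypothetical group-commit logger with one bucket per NUMA socket."""

    def __init__(self, group_size):
        self.group_size = group_size
        self.pending = defaultdict(list)  # socket -> [(txn_id, redo entries)]
        self.disk_writes = []             # one element per simulated disk write
        self.acked = []                   # transactions reported as complete

    def commit(self, socket, txn_id, entries):
        # The committing transaction joins the group of its own socket.
        group = self.pending[socket]
        group.append((txn_id, entries))
        if len(group) >= self.group_size:
            self.flush(socket)

    def flush(self, socket):
        group = self.pending.pop(socket, [])
        if not group:
            return
        # All buffers of the group go to disk in a single write.
        self.disk_writes.append([e for _, entries in group for e in entries])
        # Only after that write are the group's clients notified.
        self.acked.extend(txn_id for txn_id, _ in group)


def demo():
    logger = GroupCommitLogger(group_size=2)
    logger.commit(0, "t1", ["t1:w1"])
    logger.commit(1, "t2", ["t2:w1"])
    logger.commit(0, "t3", ["t3:w1", "t3:w2"])  # fills the group of socket 0
    return logger
```

In `demo`, transactions t1 and t3 share one disk write, while t2 keeps waiting until its own group on socket 1 is flushed.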

Summary

The Group Synchronous Redo Logging option is an extremely safe and strict logging option because it ensures total synchronization of the client application and the WAL Redo Log entries, thus ensuring total durability and consistency with absolutely no data loss. This logging option prevents the situation where a client application might mark a transaction as successful when it has not yet been persisted to disk.

On the one hand, this option performs fewer disk writes than the Synchronous Redo Logging option, which may mean that it is faster. On the other hand, transactions are locked for longer: they remain locked until all the transactions in the same NUMA-aware group have been written to the WAL Redo Log on the disk.

The benefits of using this option depend on the type of transactional workload. For example, this option benefits systems that have many transactions (and less so for systems that have few transactions, because there are few disk writes anyway).

- Asynchronous Redo Logging

The Asynchronous Redo Logging option is the fastest logging method. However, it does not guarantee against data loss: data that is still in the buffer and has not yet been written to disk may be lost upon a power failure or database crash. When a transaction is committed by a client application, the transaction redo entries are recorded in internal buffers and written to disk at preconfigured intervals. The client application does not wait for the data to be written to disk; it continues to the next transaction. This is what makes asynchronous redo logging the fastest logging method.

When a transaction is committed by a client application, the transaction redo entries are recorded in the WAL Redo Log, as follows -

- While a transaction is in progress, it is stored in the MOT's memory.

- After a transaction finishes and the client application sends a Commit command, the transaction redo entries are written to internal buffers, but are not yet written to disk. Then changes to the MOT data memory take place and the client application is notified that the transaction is committed.

- At a preconfigured interval, a redo log thread running in the background collects all the buffered redo log entries and writes them to disk.
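The three steps above can be sketched as a toy model in Python. This is only an illustration of the buffering pattern, not MOT's implementation: the class name `AsyncRedoLog`, the `flush_interval_s` argument and the in-memory `disk` list (standing in for the WAL file) are all hypothetical.

```python
import threading


class AsyncRedoLog:
    """Toy model: commits buffer redo entries; a background thread flushes them."""

    def __init__(self, flush_interval_s=0.05):
        self.flush_interval_s = flush_interval_s  # hypothetical flush interval
        self.buffer = []   # redo entries accepted but not yet on "disk"
        self.disk = []     # stands in for the WAL file on persistent storage
        self.lock = threading.Lock()
        self.stop_event = threading.Event()
        self.flusher = threading.Thread(target=self._flush_loop, daemon=True)
        self.flusher.start()

    def commit(self, txn_id, redo_entries):
        # The commit returns as soon as the entries are buffered; no disk wait.
        with self.lock:
            self.buffer.extend((txn_id, entry) for entry in redo_entries)
        return "committed"

    def _flush_once(self):
        # Take everything buffered so far and write it as one batch.
        with self.lock:
            batch, self.buffer = self.buffer, []
        self.disk.extend(batch)
        return len(batch)

    def _flush_loop(self):
        # wait() returns False on timeout, so this loops once per interval
        # until close() sets the stop event.
        while not self.stop_event.wait(self.flush_interval_s):
            self._flush_once()

    def close(self):
        # Clean shutdown: stop the flusher, then flush whatever is left.
        self.stop_event.set()
        self.flusher.join()
        self._flush_once()
```

In this model, anything still in `buffer` when the process dies is lost even though `commit` already returned, which is the durability trade-off described in this section.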

Summary

The Asynchronous Redo Logging option is the fastest logging option because it does not require the client application to wait for data to be written to disk. In addition, it groups the redo entries of many transactions and writes them together, thus reducing the number of disk I/Os that slow down the MOT engine.

The downside of the Asynchronous Redo Logging option is that it does not ensure that data will not get lost upon a crash or failure. Data that was committed, but was not yet written to disk, is not durable on commit and thus cannot be recovered in case of a failure. The Asynchronous Redo Logging option is most relevant for applications that are willing to sacrifice data recovery (consistency) for performance.

Configuring Logging

Two synchronous transaction logging options and one asynchronous transaction logging option are supported by the standard MogDB disk engine.

To configure logging -

- Whether synchronous or asynchronous transaction logging is performed is determined by the synchronous_commit parameter (On = Synchronous) in the postgresql.conf configuration file.

If a synchronous mode of transaction logging has been selected (synchronous_commit = On, as described above), then the enable_group_commit parameter in the mot.conf configuration file determines whether the Group Synchronous Redo Logging option or the Synchronous Redo Logging option is used. For Group Synchronous Redo Logging, you must also define in the mot.conf file which of the following thresholds determine when a group of transactions is recorded in the WAL:

- group_commit_size - The quantity of committed transactions in a group. For example, 16 means that when 16 transactions in the same group have been committed by a client application, then an entry is written to disk in the WAL Redo Log for all 16 transactions.

- group_commit_timeout - A timeout period in ms. For example, 10 means that after 10 ms, an entry is written to disk in the WAL Redo Log for each of the transactions in the same group that have been committed by their client application in the last 10 ms.

NOTE: You may refer to the REDO LOG (MOT) section for more information about configuration settings.
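How the two thresholds interact can be illustrated with a small Python model. It is a sketch under one explicit assumption: a group is written out when either threshold is reached, whichever comes first. The class `CommitGroup` and its methods are hypothetical; only the names group_commit_size and group_commit_timeout come from the text above.

```python
class CommitGroup:
    """Toy model of one commit group with a size and a timeout threshold."""

    def __init__(self, group_commit_size=16, group_commit_timeout_ms=10):
        self.size_limit = group_commit_size
        self.timeout_ms = group_commit_timeout_ms
        self.pending = []          # transaction ids waiting for the group write
        self.opened_at_ms = None   # arrival time of the first pending commit
        self.flushed_groups = []   # each element models one batched WAL write

    def _flush(self):
        # One WAL write covers every transaction pending in the group.
        self.flushed_groups.append(self.pending)
        self.pending = []
        self.opened_at_ms = None

    def commit(self, txn_id, now_ms):
        if self.opened_at_ms is None:
            self.opened_at_ms = now_ms
        self.pending.append(txn_id)
        # Size threshold: flush as soon as the group is full.
        if len(self.pending) >= self.size_limit:
            self._flush()

    def tick(self, now_ms):
        # Timeout threshold (assumed): flush a partial group once its oldest
        # pending commit has waited for the configured period.
        if self.pending and now_ms - self.opened_at_ms >= self.timeout_ms:
            self._flush()
```

With a size of 3 and a timeout of 10 ms, three commits arriving within a few milliseconds are written as one group, while a lone commit is written only after its 10 ms wait has elapsed.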


MOT Checkpoints

A Checkpoint is the point in time at which all the data of a table's rows is saved in files on persistent storage in order to create a full durable database image. It is a snapshot of the data at a specific point in time.

A Checkpoint is required in order to reduce a database's recovery time by reducing the number of WAL (Redo Log) entries that must be replayed in order to ensure durability. Checkpoints also reduce the storage space required to keep all the log entries.

If there were no Checkpoints, then in order to recover a database, all the WAL redo entries would have to be replayed from the beginning of time, which could take days or weeks depending on the quantity of records in the database. Checkpoints record the current state of the database and enable old redo entries to be discarded.

Checkpoints are essential during recovery scenarios (especially for a cold start). First, the data is loaded from the last known or a specific Checkpoint; and then the WAL is used to complete the data changes that occurred since then.

For example - If the same table row is modified 100 times, then 100 entries are recorded in the log. When Checkpoints are used, then even if a specific table row was modified 100 times, it is recorded in the Checkpoint a single time. After a Checkpoint has been recorded, recovery can be performed on the basis of that Checkpoint, and only the WAL Redo Log entries that occurred since the Checkpoint need to be replayed.
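The 100-modifications example can be made concrete with a few lines of Python. This is a simplified sketch, not the MOT file format: the log is a plain list of (key, value) entries, the Checkpoint is a dictionary, and `checkpoint_lsn` is a hypothetical name for the log position that the Checkpoint covers.

```python
def replay(state, wal_entries):
    """Apply (key, value) redo entries in order to a copy of state."""
    result = dict(state)
    for key, value in wal_entries:
        result[key] = value
    return result


# The same row is modified 100 times, producing 100 log entries.
wal = [("row1", version) for version in range(1, 101)]

# The Checkpoint stores the row a single time, in its latest state.
checkpoint = replay({}, wal)
checkpoint_lsn = len(wal)  # log position covered by the Checkpoint

# Changes made after the Checkpoint was taken.
wal += [("row1", 101), ("row2", 7)]

# Recovery: start from the Checkpoint, replay only the newer entries.
recovered = replay(checkpoint, wal[checkpoint_lsn:])

# Replaying the whole log from the start yields the same state, at the
# cost of processing every entry.
full_replay = replay({}, wal)
```

Here recovery replays 2 entries instead of 102, and the first 100 entries could be discarded once the Checkpoint exists.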

MOT Recovery

The main objective of MOT Recovery is to restore the data and the MOT engine to a consistent state after a planned shutdown (for example, for maintenance) or an unplanned crash (for example, after a power failure).

MOT recovery is performed automatically with the recovery of the rest of the MogDB database and is fully integrated into the MogDB recovery process (also called a Cold Start).

MOT recovery consists of two stages -

Checkpoint Recovery - First, data must be recovered from the latest Checkpoint file on disk by loading it into memory rows and creating indexes.

WAL Redo Log Recovery - Afterwards, the recent data (which was not captured in the Checkpoint) must be recovered from the WAL Redo Log by replaying records that were added to the log since the Checkpoint that was used in the Checkpoint Recovery (described above).

The WAL Redo Log recovery is managed and triggered by MogDB.

To configure recovery -

- While WAL recovery is performed in a serial manner, the Checkpoint recovery can be configured to run in a multi-threaded manner (meaning in parallel by multiple workers).

- Configure the checkpoint_recovery_workers parameter in the mot.conf file, which is described in the RECOVERY (MOT) section.
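The difference between the parallel Checkpoint stage and the serial WAL stage can be sketched as follows. This is an illustrative model only: the functions `load_segment` and `recover`, the segment layout and the use of `None` to mark a deleted row are assumptions made for the example, and the `workers` argument merely plays the role of the Checkpoint recovery workers setting.

```python
from concurrent.futures import ThreadPoolExecutor


def load_segment(segment):
    """Load one Checkpoint segment, modeled as a list of (key, value) rows."""
    return dict(segment)


def recover(checkpoint_segments, wal_tail, workers=3):
    table = {}
    # Stage 1: Checkpoint recovery. Segments are assumed to hold disjoint
    # rows, so they can be loaded in parallel by several workers.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for part in pool.map(load_segment, checkpoint_segments):
            table.update(part)
    # Stage 2: WAL redo recovery. Entries depend on their order, so they
    # are replayed serially, one after the other.
    for key, value in wal_tail:
        if value is None:
            table.pop(key, None)  # None marks a deleted row in this model
        else:
            table[key] = value
    return table
```

For example, `recover([[("a", 1), ("b", 2)], [("c", 3)]], [("a", 10), ("c", None)])` loads both segments and then applies the update to `a` and the deletion of `c`.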

MOT Replication and High Availability

Since MOT is integrated into MogDB and uses its replication and high availability mechanisms, both synchronous and asynchronous replication are supported out of the box.

The MogDB gs_ctl tool is used for availability control and to operate the cluster. This includes gs_ctl switchover, gs_ctl failover, gs_ctl build and so on.

You may refer to the MogDB Tools Reference document for more information.

- To configure replication and high availability.

- Refer to the relevant MogDB documentation.

MOT Memory Management

For planning and fine-tuning, see the MOT Memory and Storage Planning and MOT Configuration Settings sections.

MOT Vacuum

Use VACUUM for garbage collection and optionally to analyze a database, as follows -

- [PG]

In PostgreSQL (PG), VACUUM reclaims storage occupied by dead tuples. In normal PG operation, tuples that are deleted or that are made obsolete by an update are not physically removed from their table. They remain present until a VACUUM is done. Therefore, it is necessary to perform a VACUUM periodically, especially on frequently updated tables.

- [MOT Extension]

MOT tables do not need a periodic VACUUM operation, since dead/empty tuples are re-used by new ones. MOT tables require VACUUM operations only when their size is significantly reduced and they are not expected to grow to their original size in the near future.

For example, consider an application that periodically (for example, once a week) deletes a large amount of data from one or more tables, while inserting new data takes days and does not necessarily reach the same quantity of rows. In such cases, it makes sense to run VACUUM.

The VACUUM operation on MOT tables is always transformed into a VACUUM FULL with an exclusive table lock.

- Supported Syntax and Limitations

Activation of the VACUUM operation is performed in a standard manner.

VACUUM [FULL | ANALYZE] [ table ];

Only the FULL and ANALYZE VACUUM options are supported. The VACUUM operation can only be performed on an entire MOT table.

The following PG vacuum options are not supported:

- FREEZE

- VERBOSE

- Column specification

- LAZY mode (partial table scan)

Additionally, the following functionality is not supported:

- AUTOVACUUM

MOT Statistics

Statistics are intended for performance analysis or debugging. It is uncommon to turn them ON in a production environment (by default, they are OFF). Statistics are primarily used by database developers and to a lesser degree by database users.

There is some impact on performance, particularly on the server. Impact on the user is negligible.

The statistics are saved in the database server log. The log is located in the data folder and named postgresql-DATE-TIME.log.

Refer to STATISTICS (MOT) for detailed configuration options.

MOT Monitoring

All syntax for monitoring of PG-based FDW tables is supported. This includes Table or Index sizes (as described below). In addition, special functions exist for monitoring MOT memory consumption, including MOT Global Memory, MOT Local Memory and a single client session.

Table and Index Sizes

The size of tables and indexes can be monitored by querying pg_relation_size.

For example -

Data Size

select pg_relation_size('customer');

Index

select pg_relation_size('customer_pkey');

MOT GLOBAL Memory Details

Check the size of MOT global memory, which includes primarily the data and indexes.

select * from mot_global_memory_detail();

Result -

| numa_node | reserved_size | used_size |
|---|---|---|
| -1 | 194716368896 | 25908215808 |
| 0 | 446693376 | 446693376 |
| 1 | 452984832 | 452984832 |
| 2 | 452984832 | 452984832 |
| 3 | 452984832 | 452984832 |
| 4 | 452984832 | 452984832 |
| 5 | 364904448 | 364904448 |
| 6 | 301989888 | 301989888 |
| 7 | 301989888 | 301989888 |

Where -

- -1 is the total memory.

- 0..7 are NUMA memory nodes.
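When reading this output, it is often convenient to convert the byte counts into a utilization percentage. The following Python sketch does this for a few rows copied from the sample result above. The helper names `utilization` and `to_gib` are hypothetical, and the sketch assumes that the sizes are reported in bytes.

```python
# (numa_node, reserved_size, used_size) rows copied from the sample output.
rows = [
    (-1, 194716368896, 25908215808),  # -1 is the total memory
    (0, 446693376, 446693376),
    (5, 364904448, 364904448),
]


def utilization(rows):
    """Return {numa_node: used_size as a percentage of reserved_size}."""
    return {
        node: 100.0 * used / reserved
        for node, reserved, used in rows
        if reserved  # skip rows with nothing reserved
    }


def to_gib(size_in_bytes):
    """Convert a byte count to GiB (assumes the sizes are in bytes)."""
    return size_in_bytes / 2**30


for node, pct in utilization(rows).items():
    print(f"numa_node {node}: {pct:.1f}% of reserved memory in use")
```

For the sample data, the total row shows that only a fraction of the reserved global memory is in use, while the per-node rows listed here are fully used.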

MOT LOCAL Memory Details

Check the size of MOT local memory, which includes session memory.

select * from mot_local_memory_detail();

Result -

| numa_node | reserved_size | used_size |
|---|---|---|
| -1 | 144703488 | 144703488 |
| 0 | 25165824 | 25165824 |
| 1 | 25165824 | 25165824 |
| 2 | 18874368 | 18874368 |
| 3 | 18874368 | 18874368 |
| 4 | 18874368 | 18874368 |
| 5 | 12582912 | 12582912 |
| 6 | 12582912 | 12582912 |
| 7 | 12582912 | 12582912 |

Where -

- -1 is the total memory.

- 0..7 are NUMA memory nodes.

Session Memory

Memory for session management is taken from the MOT local memory.

Memory usage by all active sessions (connections) can be retrieved using the following query -

select * from mot_session_memory_detail();

Result -

| sessid | total_size | free_size | used_size |
|---|---|---|---|
| 1591175063.139755603855104 | 6291456 | 1800704 | 4490752 |

Legend -

- total_size - Memory allocated for the session

- free_size - Memory not in use

- used_size - Memory in actual use

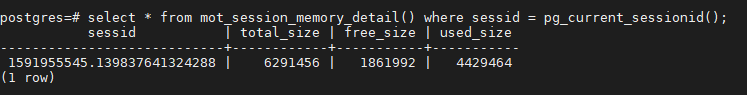

The following query enables a DBA to determine the state of local memory used by the current session -

select * from mot_session_memory_detail() where sessid = pg_current_sessionid();

Result -

MOT Error Messages

Errors may be caused by a variety of scenarios. All errors are logged in the database server log file. In addition, user-related errors are returned to the user as part of the response to the query, transaction or stored procedure execution, or to a database administration action.

- Errors reported in the Server log include - Function, Entity, Context, Error message, Error description and Severity.

- Errors reported to users are translated into standard PostgreSQL error codes and may consist of an MOT-specific message and description.

The following lists the error messages, error descriptions and error codes. The error code is an internal code and is not logged or returned to users.

Errors Written to the Log File

All errors are logged in the database server log file. The following lists the errors that are written to the database server log file and are not returned to the user. The log is located in the data folder and named postgresql-DATE-TIME.log.

Table 1 Errors Written Only to the Log File

| Message in the Log | Error Internal Code |
|---|---|
| Error code denoting success | MOT_NO_ERROR 0 |
| Out of memory | MOT_ERROR_OOM 1 |
| Invalid configuration | MOT_ERROR_INVALID_CFG 2 |
| Invalid argument passed to function | MOT_ERROR_INVALID_ARG 3 |
| System call failed | MOT_ERROR_SYSTEM_FAILURE 4 |
| Resource limit reached | MOT_ERROR_RESOURCE_LIMIT 5 |
| Internal logic error | MOT_ERROR_INTERNAL 6 |
| Resource unavailable | MOT_ERROR_RESOURCE_UNAVAILABLE 7 |
| Unique violation | MOT_ERROR_UNIQUE_VIOLATION 8 |
| Invalid memory allocation size | MOT_ERROR_INVALID_MEMORY_SIZE 9 |
| Index out of range | MOT_ERROR_INDEX_OUT_OF_RANGE 10 |
| Error code unknown | MOT_ERROR_INVALID_STATE 11 |

Errors Returned to the User

The following lists the errors that are written to the database server log file and are returned to the user.

MOT returns PG standard error codes to the envelope using a Return Code (RC). Some RCs cause the generation of an error message to the user who is interacting with the database.

The PG code (described below) is returned internally by MOT to the database envelope, which reacts to it according to standard PG behavior.

NOTE: %s, %u and %lu in the message are replaced by relevant error information, such as the query, the table name or other information.

- %s - String

- %u - Number

- %lu - Number

Table 2 Errors Returned to the User and Logged to the Log File

| Short and Long Description Returned to the User | PG Code | Internal Error Code |
|---|---|---|
| Success. Denotes success. | ERRCODE_SUCCESSFUL_COMPLETION | RC_OK = 0 |
| Failure. Unknown error has occurred. | ERRCODE_FDW_ERROR | RC_ERROR = 1 |
| Unknown error has occurred. Denotes aborted operation. | ERRCODE_FDW_ERROR | RC_ABORT |
| Column definition of %s is not supported. Column type %s is not supported yet. | ERRCODE_INVALID_COLUMN_DEFINITION | RC_UNSUPPORTED_COL_TYPE |
| Column definition of %s is not supported. Column type Array of %s is not supported yet. | ERRCODE_INVALID_COLUMN_DEFINITION | RC_UNSUPPORTED_COL_TYPE_ARR |
| Column size %d exceeds max tuple size %u. Column definition of %s is not supported. | ERRCODE_FEATURE_NOT_SUPPORTED | RC_EXCEEDS_MAX_ROW_SIZE |
| Column name %s exceeds max name size %u. Column definition of %s is not supported. | ERRCODE_INVALID_COLUMN_DEFINITION | RC_COL_NAME_EXCEEDS_MAX_SIZE |
| Column size %d exceeds max size %u. Column definition of %s is not supported. | ERRCODE_INVALID_COLUMN_DEFINITION | RC_COL_SIZE_INVLALID |
| Cannot create table. Cannot add column %s; as the number of declared columns exceeds the maximum declared columns. | ERRCODE_FEATURE_NOT_SUPPORTED | RC_TABLE_EXCEEDS_MAX_DECLARED_COLS |
| Cannot create index. Total column size is greater than maximum index size %u. | ERRCODE_FDW_KEY_SIZE_EXCEEDS_MAX_ALLOWED | RC_INDEX_EXCEEDS_MAX_SIZE |
| Cannot create index. Total number of indexes for table %s is greater than the maximum number of indexes allowed %u. | ERRCODE_FDW_TOO_MANY_INDEXES | RC_TABLE_EXCEEDS_MAX_INDEXES |
| Cannot execute statement. Maximum number of DDLs per transaction reached the maximum %u. | ERRCODE_FDW_TOO_MANY_DDL_CHANGES_IN_TRANSACTION_NOT_ALLOWED | RC_TXN_EXCEEDS_MAX_DDLS |
| Unique constraint violation. Duplicate key value violates unique constraint "%s". Key %s already exists. | ERRCODE_UNIQUE_VIOLATION | RC_UNIQUE_VIOLATION |
| Table "%s" does not exist. | ERRCODE_UNDEFINED_TABLE | RC_TABLE_NOT_FOUND |
| Index "%s" does not exist. | ERRCODE_UNDEFINED_TABLE | RC_INDEX_NOT_FOUND |
| Unknown error has occurred. | ERRCODE_FDW_ERROR | RC_LOCAL_ROW_FOUND |
| Unknown error has occurred. | ERRCODE_FDW_ERROR | RC_LOCAL_ROW_NOT_FOUND |
| Unknown error has occurred. | ERRCODE_FDW_ERROR | RC_LOCAL_ROW_DELETED |
| Unknown error has occurred. | ERRCODE_FDW_ERROR | RC_INSERT_ON_EXIST |
| Unknown error has occurred. | ERRCODE_FDW_ERROR | RC_INDEX_RETRY_INSERT |
| Unknown error has occurred. | ERRCODE_FDW_ERROR | RC_INDEX_DELETE |
| Unknown error has occurred. | ERRCODE_FDW_ERROR | RC_LOCAL_ROW_NOT_VISIBLE |
| Memory is temporarily unavailable. | ERRCODE_OUT_OF_LOGICAL_MEMORY | RC_MEMORY_ALLOCATION_ERROR |
| Unknown error has occurred. | ERRCODE_FDW_ERROR | RC_ILLEGAL_ROW_STATE |
| Null constraint violated. NULL value cannot be inserted into non-null column %s at table %s. | ERRCODE_FDW_ERROR | RC_NULL_VIOLATION |
| Critical error. Critical error: %s. | ERRCODE_FDW_ERROR | RC_PANIC |
| A checkpoint is in progress - cannot truncate table. | ERRCODE_FDW_OPERATION_NOT_SUPPORTED | RC_NA |
| Unknown error has occurred. | ERRCODE_FDW_ERROR | RC_MAX_VALUE |
| <recovery message> | - | ERRCODE_CONFIG_FILE_ERROR |
| <recovery message> | - | ERRCODE_INVALID_TABLE_DEFINITION |
| Memory engine - Failed to perform commit prepared. | - | ERRCODE_INVALID_TRANSACTION_STATE |
| Invalid option <option name> | - | ERRCODE_FDW_INVALID_OPTION_NAME |
| Invalid memory allocation request size. | - | ERRCODE_INVALID_PARAMETER_VALUE |
| Memory is temporarily unavailable. | - | ERRCODE_OUT_OF_LOGICAL_MEMORY |
| Could not serialize access due to concurrent update. | - | ERRCODE_T_R_SERIALIZATION_FAILURE |
| Alter table operation is not supported for memory table. Cannot create MOT tables while incremental checkpoint is enabled. Re-index is not supported for memory tables. | - | ERRCODE_FDW_OPERATION_NOT_SUPPORTED |
| Allocation of table metadata failed. | - | ERRCODE_OUT_OF_MEMORY |
| Database with OID %u does not exist. | - | ERRCODE_UNDEFINED_DATABASE |
| Value exceeds maximum precision: %d. | - | ERRCODE_NUMERIC_VALUE_OUT_OF_RANGE |
| You have reached a maximum logical capacity %lu of allowed %lu. | - | ERRCODE_OUT_OF_LOGICAL_MEMORY |
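The relationship between an internal return code, the PG code and the message template can be pictured as a simple lookup. The Python sketch below copies a handful of rows from Table 2 for illustration. The dictionary `RC_TABLE` and the function `describe` are hypothetical helpers and not part of MOT or MogDB, and the placeholder substitution uses Python string formatting only to mimic how %s is replaced.

```python
# A few rows copied from Table 2: internal code -> (PG code, message template).
RC_TABLE = {
    "RC_OK": ("ERRCODE_SUCCESSFUL_COMPLETION", "Success."),
    "RC_TABLE_NOT_FOUND": ("ERRCODE_UNDEFINED_TABLE", 'Table "%s" does not exist.'),
    "RC_INDEX_NOT_FOUND": ("ERRCODE_UNDEFINED_TABLE", 'Index "%s" does not exist.'),
    "RC_MEMORY_ALLOCATION_ERROR": (
        "ERRCODE_OUT_OF_LOGICAL_MEMORY",
        "Memory is temporarily unavailable.",
    ),
}


def describe(rc, *details):
    """Return (PG code, message) for a return code listed in RC_TABLE.

    Codes that are not listed fall back to a generic error here; that
    fallback is an assumption of this sketch.
    """
    pg_code, template = RC_TABLE.get(
        rc, ("ERRCODE_FDW_ERROR", "Unknown error has occurred.")
    )
    # Substitute %s placeholders only when details were supplied.
    message = template % details if details else template
    return pg_code, message
```

For example, `describe("RC_TABLE_NOT_FOUND", "customer")` returns the PG code ERRCODE_UNDEFINED_TABLE together with the message `Table "customer" does not exist.`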