TPCC Test Tuning Guide

This chapter describes how to run a TPCC test against MogDB and the key system-level tuning needed to reach the best tpmC performance.

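Hardware Environment

- Servers
  - The best TPCC results were obtained with one 4-socket Kunpeng server (256 cores, 512 GB to 1024 GB of memory) plus one 2-socket Kunpeng server.
  - A typical setup uses two 2-socket Kunpeng servers (128 cores, 512 GB to 1024 GB of memory).
  - Two x86 servers can also be used, but this guide does not apply NUMA tuning to them.

- Disks
  - On the database side, use two NVMe flash cards where possible.
  - Otherwise, use 3 to 4 SSDs.

- NICs
  - Hi1822, the NIC paired with Kunpeng servers.
  - On x86, use 10GbE NICs where possible.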

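Software Environment

- Database: MogDB 2.1.1

- TPCC client: BenchmarkSQL 5.0 as optimized by TiDB (https://github.com/pingcap/benchmarksql)

- Dependencies

  | Required software | Recommended version |
  | --- | --- |
  | numactl | – |
  | jdk | 1.8.0-242 |
  | ant | 1.10.5 |
  | htop | – |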

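Test Procedure

- Install MogDB. Follow the PTK installation guide; a single-node deployment is sufficient.

- Set the initial parameters and restart the database so that they take effect. See the recommended parameter settings and the instructions for creating a test database.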

- Download BenchmarkSQL 5.0, the standard TPCC test tool.

      [root@node151 ~]# git clone -b 5.0-mysql-support-opt-2.1 https://github.com/pingcap/benchmarksql.git
      Cloning into 'tpcc-mysql'...
      remote: Enumerating objects: 106, done.
      remote: Total 106 (delta 0), reused 0 (delta 0), pack-reused 106
      Receiving objects: 100% (106/106), 64.46 KiB | 225.00 KiB/s, done.
      Resolving deltas: 100% (30/30), done.

- Download and install the JDK and ant dependency packages.

      [root@node151 ~]# rpm -ivh ant-1.10.5-6.oe1.noarch.rpm jdk-8u281-linux-aarch64.rpm --force --nodeps
      warning: ant-1.10.5-6.oe1.noarch.rpm: Header V3 RSA/SHA1 Signature, key ID b25e7f66: NOKEY
      warning: jdk-8u281-linux-aarch64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY
      Verifying...                          ################################# [100%]
      Preparing...                          ################################# [100%]
      Updating / installing...
         1:jdk1.8-2000:1.8.0_281-fcs        ################################# [ 50%]
      Unpacking JAR files...
              tools.jar...
              rt.jar...
              jsse.jar...
              charsets.jar...
              localedata.jar...
         2:ant-0:1.10.5-6.oe1               ################################# [100%]

- Configure the Java environment variables.

      [root@node151 ~]# tail -3 /root/.bashrc
      export JAVA_HOME=/usr/java/jdk1.8.0_281-aarch64
      export PATH=$JAVA_HOME/bin:$PATH
      export CLASSPATH=.:$JAVA_HOME/lib:$BENCHMARKSQLPATH/run/ojdbc7.jar

- In the BenchmarkSQL directory, run ant to compile the tool. A successful build creates the build and dist directories.

      [root@node151 benchmarksql-5.0-mysql-support-opt-2.1]# pwd
      /tmp/benchmarksql-5.0-mysql-support-opt-2.1
      [root@node151 benchmarksql-5.0-mysql-support-opt-2.1]# ant
      Buildfile: /tmp/benchmarksql-5.0-mysql-support-opt-2.1/build.xml
      init:
          [mkdir] Created dir: /tmp/benchmarksql-5.0-mysql-support-opt-2.1/build
      compile:
          [javac] Compiling 12 source files to /tmp/benchmarksql-5.0-mysql-support-opt-2.1/build
      dist:
          [mkdir] Created dir: /tmp/benchmarksql-5.0-mysql-support-opt-2.1/dist
            [jar] Building jar: /tmp/benchmarksql-5.0-mysql-support-opt-2.1/dist/BenchmarkSQL-5.0.jar
      BUILD SUCCESSFUL
      Total time: 1 second

- Download the JDBC driver that matches your system architecture into the lib/postgres folder of the BenchmarkSQL directory, extract it, and delete the bundled JDBC driver.

      [root@node151 postgres]# pwd
      /tmp/benchmarksql-5.0-mysql-support-opt-2.1/lib/postgres/
      [root@node151 postgres]# ls
      openGauss-2.0.0-JDBC.tar.gz  postgresql-9.3-1102.jdbc41.jar
      [root@node151 postgres]# rm -f postgresql-9.3-1102.jdbc41.jar
      [root@node151 postgres]# tar -xf openGauss-2.0.0-JDBC.tar.gz
      [root@node151 postgres]# ls
      openGauss-2.0.0-JDBC.tar.gz  postgresql.jar

- Prepare the database side: create the database tpcc_db and the user tpcc.

      [omm@node151 ~]$ gsql -d postgres -p 26000 -r
      postgres=# create database tpcc_db;
      CREATE DATABASE
      postgres=# \q
      [omm@node151 ~]$ gsql -d tpcc_db -p 26000 -r
      tpcc_db=# CREATE USER tpcc WITH PASSWORD "tpcc@123";
      CREATE ROLE
      tpcc_db=# GRANT ALL ON schema public TO tpcc;
      GRANT
      tpcc_db=# ALTER User tpcc sysadmin;
      ALTER ROLE

- Prepare the client: go to the run folder in the BenchmarkSQL directory, edit the BenchmarkSQL configuration file, and set the test parameters, including the database user name, password, IP address, port, and database name.

      [root@node151 db1]# cd /tmp/benchmarksql-5.0-mysql-support-opt-2.1/run
      [root@node151 run]# vim props.mogdb
      db=postgres
      driver=org.opengauss.Driver
      # Connection string: set the IP address, port, and database name
      conn=jdbc:opengauss://172.16.0.176:26000/tpcc_db?prepareThreshold=1&batchMode=on&fetchsize=10&loggerLevel=off
      # User name
      user=tpcc
      # Password
      password=tpcc@123
      # Number of warehouses
      warehouses=100
      # Number of concurrent terminals
      terminals=300
      # Run time in minutes
      runMins=5
      runTxnsPerTerminal=0
      loadWorkers=100
      limitTxnsPerMin=0
      terminalWarehouseFixed=false
      newOrderWeight=45
      paymentWeight=43
      orderStatusWeight=4
      deliveryWeight=4
      stockLevelWeight=4

- Initialize the data.

      [root@node151 run]# sh runDatabaseBuild.sh props.mogdb
      # ------------------------------------------------------------
      # Loading SQL file ./sql.common/tableCreates.sql
      # ------------------------------------------------------------
      create table bmsql_config (
      cfg_name varchar(30) primary key,
      cfg_value varchar(50)
      );
      ......
      # ------------------------------------------------------------
      # Loading SQL file ./sql.postgres/buildFinish.sql
      # ------------------------------------------------------------
      -- ----
      -- Extra commands to run after the tables are created, loaded,
      -- indexes built and extra's created.
      -- PostgreSQL version.
      -- ----
      vacuum analyze;

- In runBenchmark.sh, change the path of funcs.sh to its actual location.

      [root@node151 run]# vim runBenchmark.sh
      #!/usr/bin/env bash
      if [ $# -ne 1 ] ; then
          echo "usage: $(basename $0) PROPS_FILE" >&2
          exit 2
      fi
      SEQ_FILE="./.jTPCC_run_seq.dat"
      if [ ! -f "${SEQ_FILE}" ] ; then
          echo "0" > "${SEQ_FILE}"
      fi
      SEQ=$(expr $(cat "${SEQ_FILE}") + 1) || exit 1
      echo "${SEQ}" > "${SEQ_FILE}"
      # Change the path below to the actual location of funcs.sh
      source /tmp/benchmarksql-5.0-mysql-support-opt-2.1/run/funcs.sh $1
      setCP || exit 1
      myOPTS="-Dprop=$1 -DrunID=${SEQ}"
      java -cp "$myCP" $myOPTS jTPCC

- Start the test by running the TPCC benchmark. The tpmC value is the test result. The output is also saved to runLog_mmdd-hh24miss.log.

      [root@node151 run]# sh runBenchmark.sh props.mogdb| tee runLog_`date +%m%d-%H%M%S`.log
      ...
      15:08:26,663 [Thread-16] INFO jTPCC : Term-00, Measured tpmC (NewOrders) = 106140.46
      15:08:26,663 [Thread-16] INFO jTPCC : Term-00, Measured tpmTOTAL = 235800.39
      15:08:26,664 [Thread-16] INFO jTPCC : Term-00, Session Start = 2021-08-04 15:03:26
      15:08:26,664 [Thread-16] INFO jTPCC : Term-00, Session End = 2021-08-04 15:08:26
      15:08:26,664 [Thread-16] INFO jTPCC : Term-00, Transaction Count = 1179449
      15:08:26,664 [Thread-16] INFO jTPCC : executeTime[Payment]=29893614
      15:08:26,664 [Thread-16] INFO jTPCC : executeTime[Order-Status]=2564424
      15:08:26,664 [Thread-16] INFO jTPCC : executeTime[Delivery]=4438389
      15:08:26,664 [Thread-16] INFO jTPCC : executeTime[Stock-Level]=4259325
      15:08:26,664 [Thread-16] INFO jTPCC : executeTime[New-Order]=48509926

  Adjust props.mogdb, or use several props files, and repeat the test as needed.

- To keep repeated tests from accumulating so much data that performance suffers, you can drop the data and start over.

      [root@node151 run]# sh runDatabaseDestroy.sh props.mogdb
      # ------------------------------------------------------------
      # Loading SQL file ./sql.common/tableDrops.sql
      # ------------------------------------------------------------
      drop table bmsql_config;
      drop table bmsql_new_order;
      drop table bmsql_order_line;
      drop table bmsql_oorder;
      drop table bmsql_history;
      drop table bmsql_customer;
      drop table bmsql_stock;
      drop table bmsql_item;
      drop table bmsql_district;
      drop table bmsql_warehouse;
      drop sequence bmsql_hist_id_seq;

  When debugging, you can build once and run many times. For a formal test, run a full Build / Run / Destroy cycle every time.
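When comparing several runs, it can help to pull the tpmC figures out of the saved run logs automatically. The following is a minimal Python sketch, assuming the log lines have the same format as the sample output in the run step. The function name `parse_tpmc` and the regular expression are illustrative and are not part of BenchmarkSQL.

```python
import re

# Matches result lines such as:
# 15:08:26,663 [Thread-16] INFO jTPCC : Term-00, Measured tpmC (NewOrders) = 106140.46
RESULT_RE = re.compile(r"Measured (tpmC \(NewOrders\)|tpmTOTAL)\s*=\s*([0-9.]+)")


def parse_tpmc(log_text):
    """Return a dict with the tpmC and tpmTOTAL values found in a run log."""
    results = {}
    for label, value in RESULT_RE.findall(log_text):
        key = "tpmC" if label.startswith("tpmC") else "tpmTOTAL"
        results[key] = float(value)
    return results


sample = """\
15:08:26,663 [Thread-16] INFO jTPCC : Term-00, Measured tpmC (NewOrders) = 106140.46
15:08:26,663 [Thread-16] INFO jTPCC : Term-00, Measured tpmTOTAL = 235800.39
15:08:26,664 [Thread-16] INFO jTPCC : Term-00, Transaction Count = 1179449
"""
print(parse_tpmc(sample))
```

To process a real run, read the log file into a string and pass it to `parse_tpmc`.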

Tuning

1. Host tuning (Kunpeng only)

Adjust the BIOS settings:

- In BIOS > Advanced > MISC Config, set Support Smmu to Disabled.

- In BIOS > Advanced > MISC Config, set CPU Prefetching Configuration to Disabled.

- In BIOS > Advanced > Memory Config, set Die Interleaving to Disable.

2. Operating system tuning (Kunpeng only)

- Set the kernel PAGESIZE to 64 KB (usually the default).

- Stop irqbalance.

      systemctl stop irqbalance

- Turn off automatic NUMA balancing.

      echo 0 > /proc/sys/kernel/numa_balancing

- Turn off transparent huge pages.

      echo 'never' > /sys/kernel/mm/transparent_hugepage/enabled
      echo 'never' > /sys/kernel/mm/transparent_hugepage/defrag

- Set the I/O queue scheduler for the NVMe disks.

      echo none > /sys/block/nvme*n*/queue/scheduler
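To confirm that these settings took effect, read the same files back. The transparent huge page and I/O scheduler files list every available option and mark the active one in square brackets, for example `always madvise [never]`. The following is a minimal Python sketch for checking that; the helper names `active_option` and `check` are illustrative.

```python
import re
from pathlib import Path


def active_option(text):
    """Return the bracketed (active) option from a sysfs option list, or None."""
    match = re.search(r"\[([^\]]+)\]", text)
    return match.group(1) if match else None


def check(path, expected):
    """Return True/False if the file exists, or None if it is absent on this system."""
    p = Path(path)
    if not p.exists():
        return None
    return active_option(p.read_text()) == expected


# Parsing examples using typical file contents
print(active_option("always madvise [never]"))
print(active_option("[none] mq-deadline kyber"))
```

On the database host, a call such as `check("/sys/kernel/mm/transparent_hugepage/enabled", "never")` should return `True` after the tuning steps have been applied.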

3. File system configuration

- Format the disk as xfs with a block size of 8 KB.

      mkfs.xfs -b size=8192 /dev/nvme0n1 -f

4. Network configuration

- Configure multiple NIC interrupt queues.

  Download IN500_solution_5.1.0.SPC401.zip and install hinicadm.

      [root@node151 fc]# pwd
      /root/IN500_solution_5/tools/linux_arm/fc
      [root@node151 fc]# rpm -ivh hifcadm-2.4.1.0-1.aarch64.rpm
      Verifying...                          ################################# [100%]
      Preparing...                          ################################# [100%]
              package hifcadm-2.4.1.0-1.aarch64 is already installed
      [root@node151 fc]#

- Change the maximum number of interrupt queues supported by the system.

      [root@node151 config]# pwd
      /root/IN500_solution_5/tools/linux_arm/nic/config
      [root@node151 config]# ./hinicconfig hinic0 -f std_sh_4x25ge_dpdk_cfg_template0.ini
      [root@node151 config]# reboot
      [root@node151 config]# ethtool -L enp3s0 combined 48

  The best value can differ by platform and application. On the current 128-core platform, the tuned value is 12 on the server and 48 on the client.

- For interrupt tuning, enable the tso, lro, gro, and gso features.

      ethtool -K enp3s0 tso on
      ethtool -K enp3s0 lro on
      ethtool -K enp3s0 gro on
      ethtool -K enp3s0 gso on

- Check the NIC firmware version.

      [root@node151 ~]# ethtool -i enp3s0
      driver: hinic
      version: 2.3.2.11
      firmware-version: 2.4.1.0
      expansion-rom-version:
      bus-info: 0000:03:00.0
      supports-statistics: yes
      supports-test: yes
      supports-eeprom-access: no
      supports-register-dump: no
      supports-priv-flags: no

  The NIC firmware version should be 2.4.1.0.

- Update the NIC firmware if needed.

      [root@node151 cfg_data_nic_prd_1h_4x25G]# pwd
      /root/IN500_solution_5/firmware/update_bin/cfg_data_nic_prd_1h_4x25G
      [root@node151 cfg_data_nic_prd_1h_4x25G]# hinicadm updatefw -i enp3s0 -f /root/IN500_solution_5/firmware/update_bin/cfg_data_nic_prd_1h_4x25G/Hi1822_nic_prd_1h_4x25G.bin

  Restart the server, then confirm that the NIC firmware version is now 2.4.1.0.

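5. Core binding for the database server and client

- NUMA binding on Kunpeng (128 cores)

  - Install the NUMA packages on the database host and the client host.

        yum install numa* -y

    Install the NIC driver on both the database host and the client host, as described in the network configuration section above.

  - Database host

        cp `find /opt -name "bind*.sh"|head -1 ` /root
        sh /root/bind_net_irq.sh 12

    Set the database parameters:

        thread_pool_attr = '345,4,(cpubind:1-28,32-60,64-92,96-124)'
        enable_thread_pool = on

    Stop the database, then start it with the following command instead:

        numactl -C 1-28,32-60,64-92,96-124 mogdb --single_node -D /opt/data/db2/ -p 26000 &

  - Client

    Copy /root/bind_net_irq.sh to the client.

        sh /root/bind_net_irq.sh 48

    Change the benchmark start command to:

        numactl -C 0-19,32-51,64-83,96-115 sh runBenchmark.sh props.mog

- NUMA binding on Kunpeng (256 cores)

  - Install the NUMA packages on the database host and the client host.

        yum install numa* -y

    Install the NIC driver on both the database host and the client host, as described in the network configuration section above.

  - Database host

        cp `find /opt -name "bind*.sh"|head -1 ` /root
        sh /root/bind_net_irq.sh 24

    Set the database parameters:

        thread_pool_attr = '696,4,(cpubind:1-28,32-60,64-92,96-124,128-156,160-188,192-220,224-252)'
        enable_thread_pool = on

    Stop the database, then start it with the following command instead:

        numactl -C 1-28,32-60,64-92,96-124,128-156,160-188,192-220,224-252 mogdb --single_node -D /opt/data/db2/ -p 26000 &

  - Client

    Copy /root/bind_net_irq.sh to the client.

        sh /root/bind_net_irq.sh 48

    Change the benchmark start command to:

        numactl -C 0-19,32-51,64-83,96-115 sh runBenchmark.sh props.mog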
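When adapting the core lists to a different machine, it is easy to miscount how many cores a range list covers and which cores remain free. The following is a minimal Python sketch that expands a CPU list in the form used by `numactl -C` and `cpubind`. The function name `expand_cpu_list` is illustrative, and the sketch only does arithmetic on the lists; it does not query the hardware.

```python
def expand_cpu_list(spec):
    """Expand a CPU list such as '1-28,32-60' into a sorted list of core IDs."""
    cores = set()
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            start, end = (int(x) for x in part.split("-"))
            cores.update(range(start, end + 1))
        else:
            cores.add(int(part))
    return sorted(cores)


# Core lists from the 128-core example
db_cores = expand_cpu_list("1-28,32-60,64-92,96-124")
client_cores = expand_cpu_list("0-19,32-51,64-83,96-115")

# Cores on a 128-core host that the database process is not bound to
unbound = [c for c in range(128) if c not in set(db_cores)]

print(len(db_cores), len(client_cores))
print(unbound)
```

For the 128-core database host, the list covers 115 cores and leaves 13 unbound, including core 0.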

6. Database parameter tuning (general)

Modify postgresql.conf under PGDATA, then restart the database.
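The list that follows sets a few parameters more than once with different values, for example `fsync`, `wal_level`, and `synchronous_commit`. The following is a minimal Python sketch for spotting such repeats. It assumes that, as in PostgreSQL, the last occurrence of a parameter in postgresql.conf is the one that takes effect; verify this against your MogDB version. The function name `effective_settings` is illustrative, and the comment handling is simplified (a `#` inside a quoted value would be cut off).

```python
def effective_settings(conf_text):
    """Return (effective, overridden) for postgresql.conf-style text.

    effective maps each parameter to its last value in the file.
    overridden maps each repeated parameter to all its values in file order.
    """
    history = {}
    for raw in conf_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or "=" not in line:
            continue
        name, value = (s.strip() for s in line.split("=", 1))
        history.setdefault(name, []).append(value)
    effective = {name: values[-1] for name, values in history.items()}
    overridden = {n: v for n, v in history.items() if len(v) > 1}
    return effective, overridden


sample = """
fsync = on
wal_level = archive
# later in the same file
fsync=off
wal_level=minimal
max_wal_senders=0
"""
effective, overridden = effective_settings(sample)
print(effective)
print(overridden)
```

Running the function over the full file before restarting shows which of the repeated values the database is expected to use.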

    max_connections = 4096
    allow_concurrent_tuple_update = true
    audit_enabled = off
    cstore_buffers = 16MB
    enable_alarm = off
    enable_codegen = false
    enable_data_replicate = off
    full_page_writes = off
    max_files_per_process = 100000
    max_prepared_transactions = 2048
    shared_buffers = 350GB
    use_workload_manager = off
    wal_buffers = 1GB
    work_mem = 1MB
    transaction_isolation = 'read committed'
    default_transaction_isolation = 'read committed'
    synchronous_commit = on
    fsync = on
    maintenance_work_mem = 2GB
    vacuum_cost_limit = 2000
    autovacuum = on
    autovacuum_mode = vacuum
    autovacuum_vacuum_cost_delay = 10
    xloginsert_locks = 48
    update_lockwait_timeout = 20min
    enable_mergejoin = off
    enable_nestloop = off
    enable_hashjoin = off
    enable_bitmapscan = on
    enable_material = off
    wal_log_hints = off
    log_duration = off
    checkpoint_timeout = 15min
    autovacuum_vacuum_scale_factor = 0.1
    autovacuum_analyze_scale_factor = 0.02
    enable_save_datachanged_timestamp = FALSE
    log_timezone = 'PRC'
    timezone = 'PRC'
    lc_messages = 'C'
    lc_monetary = 'C'
    lc_numeric = 'C'
    lc_time = 'C'
    enable_double_write = on
    enable_incremental_checkpoint = on
    enable_opfusion = on
    numa_distribute_mode = 'all'
    track_activities = off
    enable_instr_track_wait = off
    enable_instr_rt_percentile = off
    track_counts = on
    track_sql_count = off
    enable_instr_cpu_timer = off
    plog_merge_age = 0
    session_timeout = 0
    enable_instance_metric_persistent = off
    enable_logical_io_statistics = off
    enable_user_metric_persistent = off
    enable_xlog_prune = off
    enable_resource_track = off
    instr_unique_sql_count = 0
    enable_beta_opfusion = on
    enable_thread_pool = on
    # Core 0 is reserved for binding the walwriter thread
    enable_partition_opfusion = off
    wal_writer_cpu = 0
    xlog_idle_flushes_before_sleep = 500000000
    max_io_capacity = 2GB
    dirty_page_percent_max = 0.1
    candidate_buf_percent_target = 0.7
    bgwriter_delay = 500
    pagewriter_sleep = 30
    checkpoint_segments = 10240
    advance_xlog_file_num = 100
    autovacuum_max_workers = 20
    autovacuum_naptime = 5s
    bgwriter_flush_after = 256kB
    data_replicate_buffer_size = 16MB
    enable_stmt_track = off
    remote_read_mode = non_authentication
    wal_level = archive
    hot_standby = off
    hot_standby_feedback = off
    client_min_messages = ERROR
    log_min_messages = FATAL
    enable_asp = off
    enable_bbox_dump = off
    enable_ffic_log = off
    enable_twophase_commit = off
    minimum_pool_size = 200
    wal_keep_segments = 1025
    incremental_checkpoint_timeout = 5min
    max_process_memory = 12GB
    vacuum_cost_limit = 10000
    xloginsert_locks = 8
    wal_writer_delay = 100
    wal_file_init_num = 30
    wal_level = minimal
    max_wal_senders = 0
    fsync = off
    synchronous_commit = off
    enable_indexonlyscan = on
    thread_pool_attr = '345,4,(cpubind:1-28,32-60,64-92,96-124)'
    enable_page_lsn_check = off
    enable_double_write = off

7. BenchmarkSQL tuning

- Connection string

      conn=jdbc:opengauss://10.10.10.40:26000/tpcc?prepareThreshold=1&batchMode=on&fetchsize=10&loggerLevel=off

- Edit the table creation file to spread the data out: adjust FILLFACTOR and place the data in separate tablespaces.

      [root@node151 ~]# ls benchmarksql-5.0-mysql-support-opt-2.1/run/sql.common/tableCreates.sql
      benchmarksql-5.0-mysql-support-opt-2.1/run/sql.common/tableCreates.sql
      [root@node151 sql.common]# cat tableCreates.sql
      CREATE TABLESPACE example2 relative location 'tablespace2';
      CREATE TABLESPACE example3 relative location 'tablespace3';

      create table bmsql_config (
        cfg_name    varchar(30),
        cfg_value   varchar(50)
      );

      create table bmsql_warehouse (
        w_id        integer   not null,
        w_ytd       decimal(12,2),
        w_tax       decimal(4,4),
        w_name      varchar(10),
        w_street_1  varchar(20),
        w_street_2  varchar(20),
        w_city      varchar(20),
        w_state     char(2),
        w_zip       char(9)
      ) WITH (FILLFACTOR=80);

      create table bmsql_district (
        d_w_id       integer       not null,
        d_id         integer       not null,
        d_ytd        decimal(12,2),
        d_tax        decimal(4,4),
        d_next_o_id  integer,
        d_name       varchar(10),
        d_street_1   varchar(20),
        d_street_2   varchar(20),
        d_city       varchar(20),
        d_state      char(2),
        d_zip        char(9)
      ) WITH (FILLFACTOR=80);

      create table bmsql_customer (
        c_w_id         integer        not null,
        c_d_id         integer        not null,
        c_id           integer        not null,
        c_discount     decimal(4,4),
        c_credit       char(2),
        c_last         varchar(16),
        c_first        varchar(16),
        c_credit_lim   decimal(12,2),
        c_balance      decimal(12,2),
        c_ytd_payment  decimal(12,2),
        c_payment_cnt  integer,
        c_delivery_cnt integer,
        c_street_1     varchar(20),
        c_street_2     varchar(20),
        c_city         varchar(20),
        c_state        char(2),
        c_zip          char(9),
        c_phone        char(16),
        c_since        timestamp,
        c_middle       char(2),
        c_data         varchar(500)
      ) WITH (FILLFACTOR=80) tablespace example2;

      create sequence bmsql_hist_id_seq;

      create table bmsql_history (
        hist_id  integer,
        h_c_id   integer,
        h_c_d_id integer,
        h_c_w_id integer,
        h_d_id   integer,
        h_w_id   integer,
        h_date   timestamp,
        h_amount decimal(6,2),
        h_data   varchar(24)
      ) WITH (FILLFACTOR=80);

      create table bmsql_new_order (
        no_w_id  integer   not null,
        no_d_id  integer   not null,
        no_o_id  integer   not null
      ) WITH (FILLFACTOR=80);

      create table bmsql_oorder (
        o_w_id       integer      not null,
        o_d_id       integer      not null,
        o_id         integer      not null,
        o_c_id       integer,
        o_carrier_id integer,
        o_ol_cnt     integer,
        o_all_local  integer,
        o_entry_d    timestamp
      ) WITH (FILLFACTOR=80);

      create table bmsql_order_line (
        ol_w_id         integer   not null,
        ol_d_id         integer   not null,
        ol_o_id         integer   not null,
        ol_number       integer   not null,
        ol_i_id         integer   not null,
        ol_delivery_d   timestamp,
        ol_amount       decimal(6,2),
        ol_supply_w_id  integer,
        ol_quantity     integer,
        ol_dist_info    char(24)
      ) WITH (FILLFACTOR=80);

      create table bmsql_item (
        i_id     integer      not null,
        i_name   varchar(24),
        i_price  decimal(5,2),
        i_data   varchar(50),
        i_im_id  integer
      );

      create table bmsql_stock (
        s_w_id       integer       not null,
        s_i_id       integer       not null,
        s_quantity   integer,
        s_ytd        integer,
        s_order_cnt  integer,
        s_remote_cnt integer,
        s_data       varchar(50),
        s_dist_01    char(24),
        s_dist_02    char(24),
        s_dist_03    char(24),
        s_dist_04    char(24),
        s_dist_05    char(24),
        s_dist_06    char(24),
        s_dist_07    char(24),
        s_dist_08    char(24),
        s_dist_09    char(24),
        s_dist_10    char(24)
      ) WITH (FILLFACTOR=80) tablespace example3;
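Because the connection string carries the host, port, database name, and driver options in one line, a quick parse can catch mismatches before a long run, such as a database name that differs from the one created earlier. The following is a minimal Python sketch using only the standard library; the function name `parse_jdbc_url` is illustrative and is not part of BenchmarkSQL or the JDBC driver.

```python
from urllib.parse import urlsplit, parse_qsl


def parse_jdbc_url(url):
    """Split a jdbc:<driver>://host:port/db?k=v URL into its parts."""
    if not url.startswith("jdbc:"):
        raise ValueError("not a JDBC URL: " + url)
    parts = urlsplit(url[len("jdbc:"):])
    return {
        "driver": parts.scheme,
        "host": parts.hostname,
        "port": parts.port,
        "database": parts.path.lstrip("/"),
        "options": dict(parse_qsl(parts.query)),
    }


conn = ("jdbc:opengauss://10.10.10.40:26000/tpcc"
        "?prepareThreshold=1&batchMode=on&fetchsize=10&loggerLevel=off")
info = parse_jdbc_url(conn)
print(info["host"], info["port"], info["database"])
print(info["options"])
```

Comparing `info["database"]` with the database created during the test steps is one way to confirm that the props file points at the intended target.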

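8. Database file placement tuning (general)

Place the default data directory, xlog, example2, and example3 on separate physical disks to avoid I/O bottlenecks. If only two fast disks are available, move xlog first. If three are available, move xlog and example2 first. An example of separating them:

    PGDATA=/opt/data/mogdb
    cd $PGDATA
    mv pg_xlog /tpccdir1
    ln -s /tpccdir1/pg_xlog .
    cd pg_location
    mv tablespace2 /tpccdir2
    ln -s /tpccdir2/tablespace2 .
    mv tablespace3 /tpccdir3
    ln -s /tpccdir3/tablespace3 .

9. System resource monitoring tools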

-

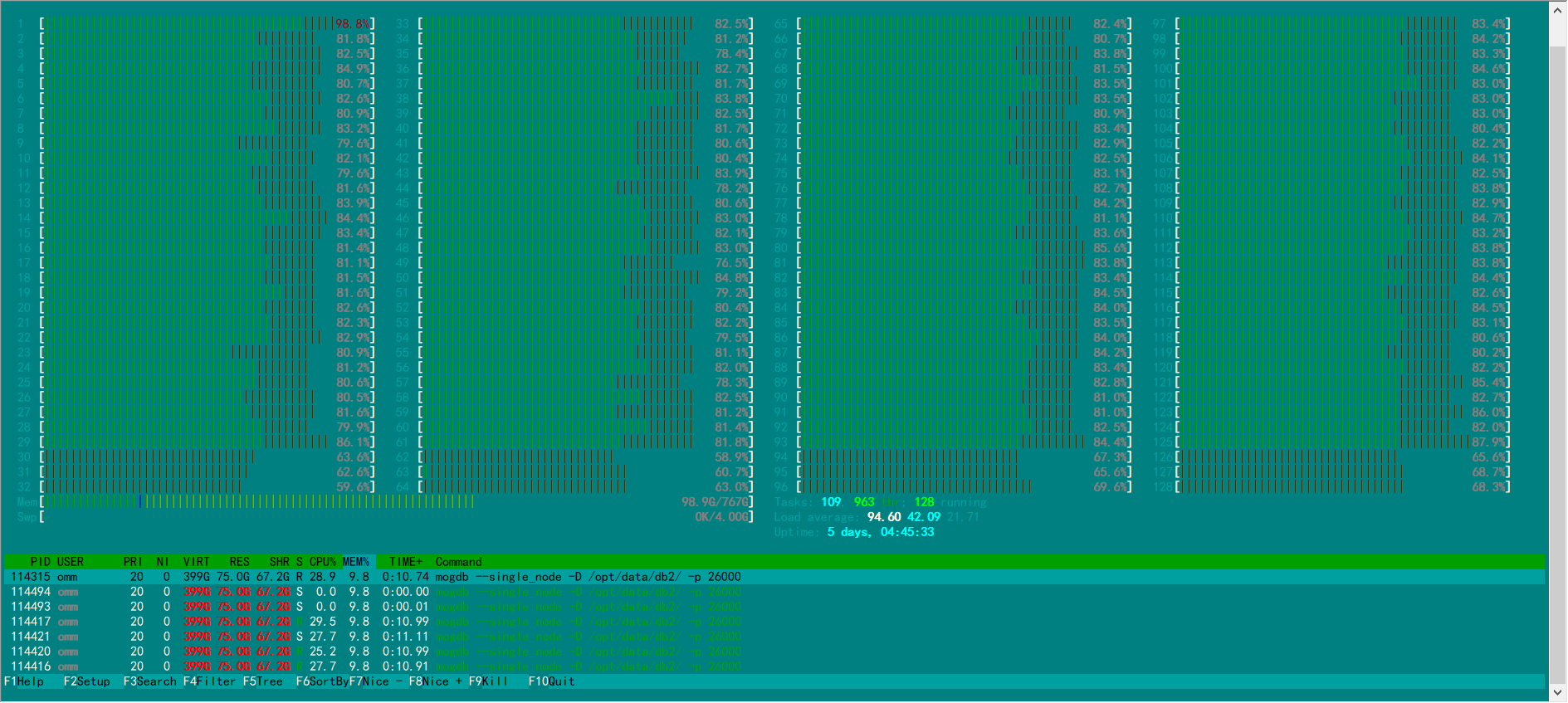

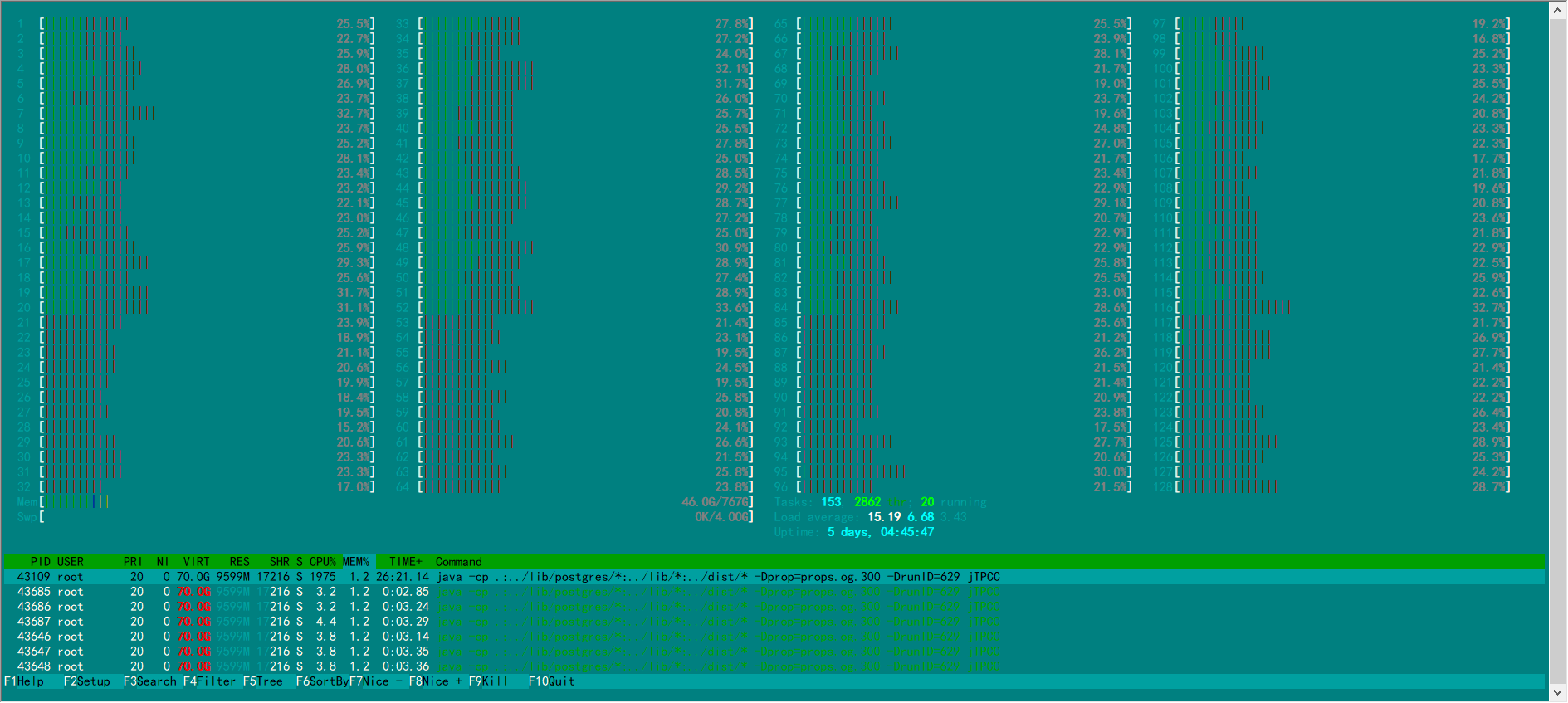

htop 观察CPU使用情况,arm平台需要从源码编译。

使用htop监控数据库服务端和tpcc客户端CPU利用情况,最佳性能测试情况下,各个业务CPU的占用率都非常高(> 90%)。如果有CPU占用率没有达标,可能是绑核方式不对或其他问题,需要定位找到根因进行调整。

-

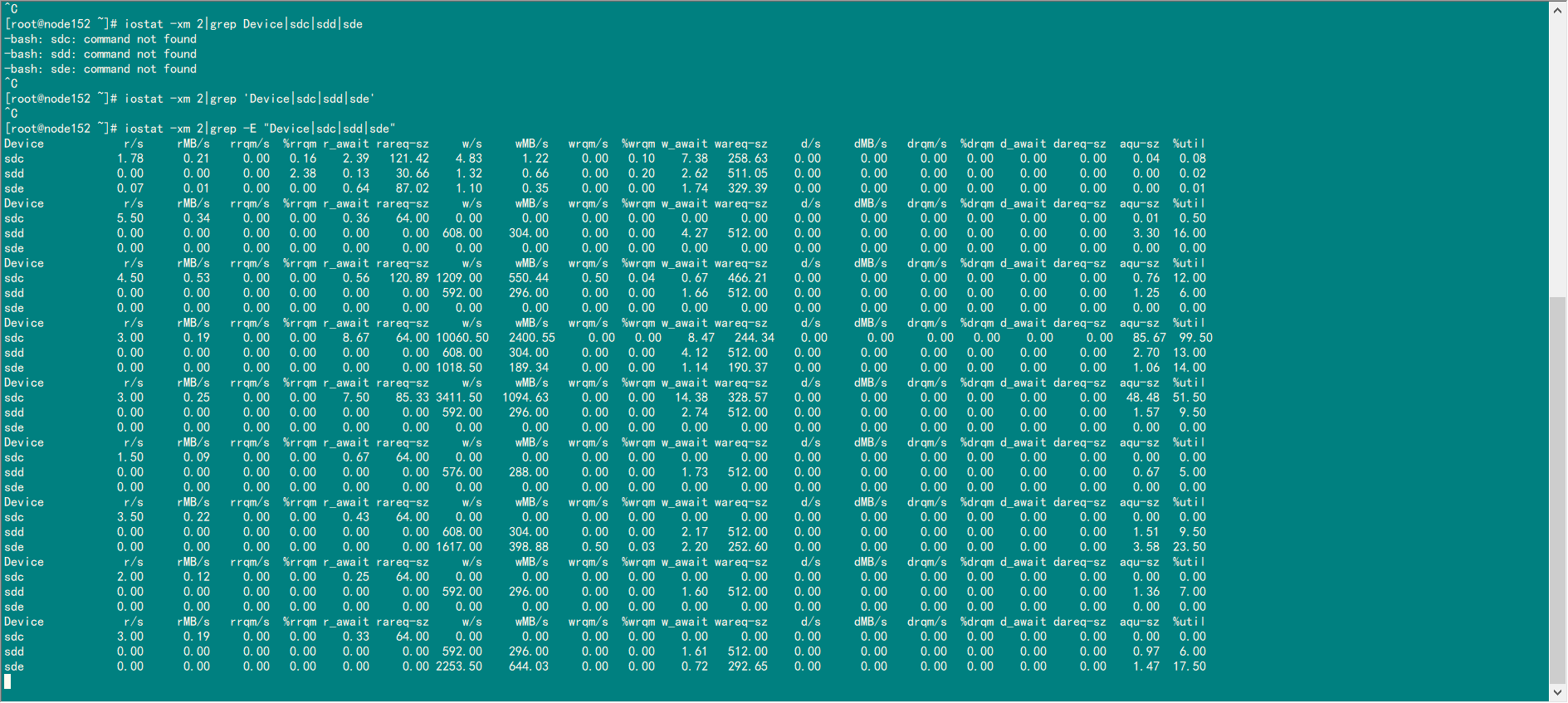

iostat 查看系统IO使用情况。

-

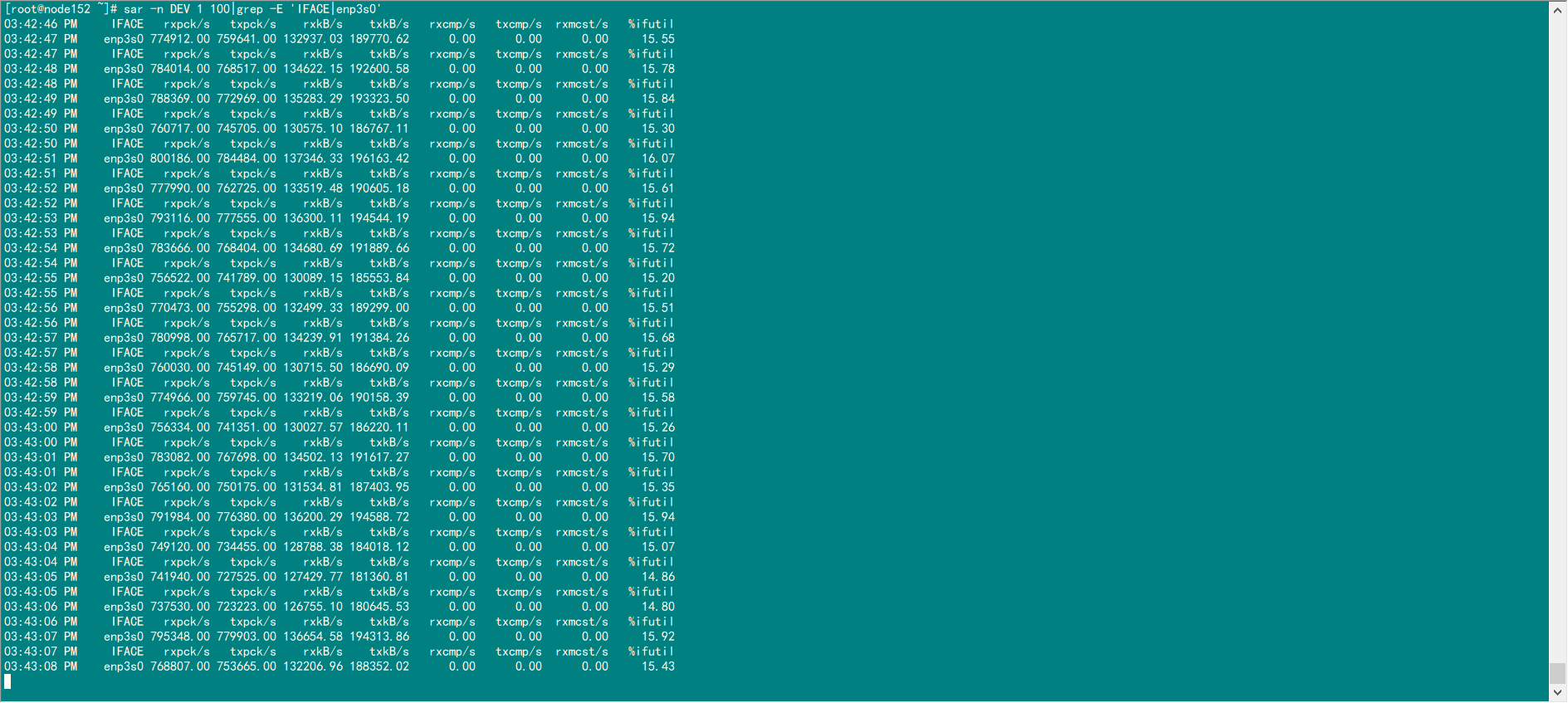

sar 查看网络使用情况。

-

nmon 作为系统资源整体监控。

Selected screenshots

- Database htop

- Client htop

- iostat: system I/O usage

- sar: network usage

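Ideal results

4-socket Kunpeng server (256 cores), 1000 warehouses, 500 concurrent terminals: 2.5 million tpmC

2-socket Kunpeng server, 100 warehouses, 100 concurrent terminals: 900,000 tpmC

2-socket Kunpeng server, 100 warehouses, 300 concurrent terminals: 1.4 million tpmC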