Upgrade Guide

Overview

This document describes the version upgrade and rollback processes. It also provides troubleshooting methods for common problems.

Intended Audience

This document is mainly intended for upgrade operators. They must have the following experience and skills:

- Be familiar with the current network topology and the versions of related NEs (network elements).

- Have experience in maintaining the related devices and be familiar with their operation and maintenance methods.

Upgrade Scheme

This section describes how to select an upgrade mode.

Decide whether to upgrade the current system based on the new features of MogDB and the state of your database.

The supported upgrade modes are in-place upgrade and gray upgrade. The upgrade strategies are major upgrade and minor upgrade.

After you choose the upgrade mode, the system automatically selects the suitable upgrade strategy.

- In-place upgrade: All services must be stopped during the upgrade. All nodes are upgraded at the same time.

- Gray upgrade: Full-service operations are supported during the upgrade. All nodes are also upgraded at the same time. (Currently, only the gray upgrade from version 1.1.0 to 2.0.0 is supported.)

Version Requirements Before the Upgrade (Upgrade Path)

Table 1 lists the MogDB upgrade version requirements.

Table 1 Version requirements before the upgrade (upgrade path)

| Version | Description |
|---|---|
| MogDB 1.1.0 or later | Can be upgraded to any version later than MogDB 1.1.0. |

NOTE: Run gsql with the -V (or --version) option to check the version before the upgrade:

$ gsql -V
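The upgrade path rule in Table 1 can be checked with a small version comparison. The sketch below is only an illustration: the helper name `version_ge` and the hard-coded `current` value are assumptions, and the version number is expected to be copied by hand from the `gsql -V` output, because the exact output format is not described here. It relies on `sort -V` from GNU coreutils.

```bash
#!/usr/bin/env bash
# Succeed (exit status 0) if version $1 is greater than or equal to version $2.
# Uses the version sort of GNU coreutils (sort -V).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical value: replace it with the version reported by `gsql -V`.
current="2.0.0"

if version_ge "$current" "1.1.0"; then
    echo "upgrade path supported"
else
    echo "upgrade path not supported"
fi
```

A version sort is used instead of a plain string comparison so that, for example, 1.10.0 is treated as later than 1.2.0.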

Impact and Constraints

The following constraints apply to the upgrade:

- The upgrade cannot be performed concurrently with capacity expansion or reduction.

- VIP (virtual IP) is not supported.

- During the upgrade, do not modify the wal_level, max_connections, max_prepared_transactions, and max_locks_per_transaction GUC parameters. Otherwise, the instance starts abnormally after a rollback.

- Perform the upgrade when the database system is under a light workload. Choose off-peak hours based on your experience, for example, holidays.

- Before the upgrade, make sure that the database is normal. You can run the gs_om -t status command to check the database status. If the returned value of cluster_state is Normal, the database is normal.

- Before the upgrade, make sure that mutual trust is established between database nodes. To verify it, run the ssh hostname command on any node to connect to another node. If any two nodes can connect to each other without a password, mutual trust is normal. (Generally, when the database status is normal, mutual trust is normal.)

- The database deployment mode must stay the same before and after the upgrade. The deployment mode is verified before the upgrade, and an error is reported if it changes after the upgrade.

- Before the upgrade, make sure that the OS is normal. You can check the OS status using the gs_checkos tool.

- In-place upgrade requires services to be stopped. Gray upgrade supports full-service operations.

- The database must be running normally, and the data of the primary database node (DN) must be fully synchronized to the standby DN.

- Kerberos must not be enabled during the upgrade.

- Do not modify the version.cfg file decompressed from the installation package.

- If an error causes the upgrade to fail, you must perform the rollback manually. The next upgrade can be performed only after the rollback succeeds.

- After a rollback, if the next upgrade succeeds, GUC parameters that were set before the upgrade was submitted become invalid.

- During the upgrade, do not set GUC parameters manually.

- During the gray upgrade, services are interrupted for less than 10 seconds.

- During the upgrade, OM operations can be performed only when the kernel and OM versions are consistent, that is, when the kernel code and OM code come from the same software package. If the pre-installation script of the installation package is executed but the upgrade is not performed, or if the pre-installation script of the baseline package is not executed after a rollback, the kernel code is inconsistent with the OM code.

- If new fields are added to a system table during the upgrade but do not appear in the output of the \d command after the upgrade, you can run a SELECT statement to check the new fields.

- The upgrade is allowed only when the value of enable_stream_replication is on.

- During the gray upgrade, the number of concurrent read/write services must be less than 200.

- If the MOT is used in MogDB 1.1.0, MogDB 1.1.0 cannot be upgraded to MogDB 2.0.0.
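The mutual-trust constraint in the list above can be verified for several nodes at once. The following is a minimal sketch, not part of the MogDB tooling: the function name `hosts_without_trust` and the node names are hypothetical. It uses the standard OpenSSH options BatchMode=yes, which makes ssh fail instead of prompting for a password, and ConnectTimeout.

```bash
#!/usr/bin/env bash
# Print every host that cannot be reached over SSH without a password.
# BatchMode=yes disables password prompts, so a missing trust relationship
# shows up as a failed connection instead of an interactive prompt.
hosts_without_trust() {
    local host
    for host in "$@"; do
        ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true \
            </dev/null >/dev/null 2>&1 || echo "$host"
    done
}

# Hypothetical node names; replace them with the real database nodes:
# hosts_without_trust node1 node2 node3
```

If the function prints nothing, every listed node accepted a passwordless connection from the current node. Run it on each node to confirm that trust holds in both directions.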

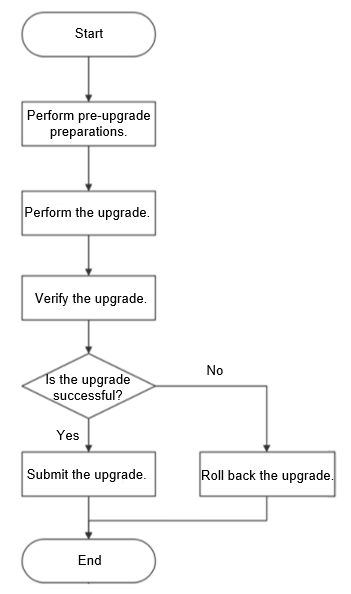

Upgrade Process

This section describes the upgrade process.

Figure 1 Upgrade process

NOTE: The time listed in the following table is for reference only. The actual time required depends on the upgrade environment.

Table 2 Estimated upgrade efficiency

| Procedure | Recommended Start Time | Time Required (Day/Hour/Minute) | Service Interruption Time | Remarks |
|---|---|---|---|---|
| Perform the pre-upgrade preparations and check operations. | One day before the upgrade | About 2 to 3 hours | No impact on services | Pre-upgrade check, data backup, and software package verification |
| Perform the upgrade. | Off-peak hours | The time is mainly spent in starting and stopping the database and modifying the system table of each database. The upgrade usually takes less than 30 minutes. | The service interruption time is the same as the upgrade time. Generally, the time taken is not greater than 30 minutes. | Performed based on the Upgrade Guide |
| Verify the upgrade. | Off-peak hours | About 30 minutes | The service interruption time is the same as the upgrade verification time, about 30 minutes. | - |
| Submit the upgrade. | Off-peak hours | The upgrade submission usually takes less than 10 minutes. | The service interruption time is the same as the upgrade submission time. Generally, the time taken is not greater than 10 minutes. | - |
| Roll back the upgrade. | Off-peak hours | The rollback usually takes less than 30 minutes. | The service interruption time is the same as the rollback time. Generally, the time taken is not greater than 30 minutes. | - |

Pre-Upgrade Preparations and Check

Pre-Upgrade Preparations and Checklist

Table 3 Pre-upgrade preparations and checklist

| No. | Item to Be Prepared for the Upgrade | Preparation Content | Recommended Start Time | Time Required (Day/Hour/Minute) |
|---|---|---|---|---|
| 1 | Collect node information. | Obtain the name, IP address, and passwords of users root and omm of the related database nodes. | One day before the upgrade | 1 hour |
| 2 | Set remote login as user root. | Set the configuration file that allows remote login as user root. | One day before the upgrade | 2 hours |
| 3 | Back up data. | For details, see the Backup and Restoration section in the Administrator Guide. | One day before the upgrade | The time taken varies depending on the volume of data to be backed up and the backup strategy. |
| 4 | Obtain and verify the installation package. | Obtain the installation package and verify the package integrity. | One day before the upgrade | 0.5 hour |
| 5 | Perform the health check. | Check the OS status using the gs_checkos tool. | One day before the upgrade | 0.5 hour |
| 6 | Check the disk usage of each database node. | Check the disk usage by running the df command. | One day before the upgrade | 0.5 hour |
| 7 | Check the database status. | Check the database status using the gs_om tool. | One day before the upgrade | 0.5 hour |

NOTE: The time required varies depending on the environment, including data volume, server performance, and other factors.

Collecting Node Information

Contact the system administrator to obtain the environment information, such as the name and IP address of each database node and the passwords of users root and omm.

| No. | Node Name | IP Address of the Node | Password of User root | Password of User omm | Remarks |
|---|---|---|---|---|---|
| 1 | - | - | - | - | - |

Backing Up Data

If the upgrade fails, services are affected. Therefore, back up data in advance so that services can be restored quickly if a failure occurs.

For details about data backup, see the Backup and Restoration section in the Administrator Guide.

Obtaining the Installation Package

You can obtain the installation package from this website.

Performing the Health Check

The gs_checkos tool can be used to check the OS status.

Prerequisites

- The current hardware and network environment is normal.

- The mutual trust between the root users of all hosts is normal.

- The gs_checkos command can be executed only as user root.

Procedure

- Log in to the primary database node as user root.

- Run the following command to check the server OS parameters:

      # gs_checkos -i A

  Checking the OS parameters ensures that the database can be pre-installed normally and can run safely and efficiently after installation.

Checking the Disk Usage of the Database Node

It is recommended that the upgrade is performed when the disk usage of the database node is less than 80%.
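The 80% recommendation can be checked against the output of `df`. The sketch below is an illustration under stated assumptions: the function name `disks_over_threshold` is hypothetical, the input is expected in the POSIX format produced by `df -P`, and mount points are assumed to contain no spaces.

```bash
#!/usr/bin/env bash
# Read `df -P` output on stdin and print "<mount point> <usage>" for every
# file system whose usage is at or above the limit (default: 80 percent).
disks_over_threshold() {
    local limit="${1:-80}"
    awk -v limit="$limit" 'NR > 1 {
        use = $5
        sub(/%/, "", use)                 # "85%" -> "85"
        if (use + 0 >= limit) print $6, use
    }'
}

# Check the local node; no output means every file system is below the limit.
df -P | disks_over_threshold 80
```

Run the check on every database node, for example before starting the pre-installation script.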

Checking the Database Status

This section describes how to check the database status.

Procedure

- Log in to the primary database node as user omm and run the source command to reload environment variables.

      # su - omm
      $ source /home/omm/.bashrc

- Run the following command to check the database status:

      $ gs_om -t status

- Ensure that the database status is normal.
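The status check can be turned into a scripted gate before the upgrade. The following sketch assumes that the `gs_om -t status` output contains a line of the form `cluster_state : Normal`, as described in Impact and Constraints. The function name `cluster_is_normal` is hypothetical, and the real command is run only where gs_om is installed.

```bash
#!/usr/bin/env bash
# Read `gs_om -t status` output on stdin and succeed only if it contains
# a line of the form "cluster_state : Normal" (assumed output format).
cluster_is_normal() {
    grep -Eq '^[[:space:]]*cluster_state[[:space:]]*:[[:space:]]*Normal[[:space:]]*$'
}

# Run the real check only on hosts where gs_om is available.
if command -v gs_om >/dev/null 2>&1; then
    if gs_om -t status | cluster_is_normal; then
        echo "database status is Normal"
    else
        echo "database status is not Normal; do not start the upgrade" >&2
    fi
fi
```

Matching the whole line keeps the check strict: any other cluster_state value is treated as not normal.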

Upgrade Procedure

This section describes how to perform the in-place upgrade and the gray upgrade.

Procedure

- Log in to the primary database node as user root.

- Create a directory for storing the new package.

      # mkdir -p /opt/software/mogdb_upgrade

- Upload the new package to the /opt/software/mogdb_upgrade directory and decompress the package.

- Go to the directory that contains the script files.

      # cd /opt/software/mogdb_upgrade/script

- Before the in-place or gray upgrade, execute the pre-installation script by running the gs_preinstall command.

      # ./gs_preinstall -U omm -G dbgrp -X /opt/software/mogdb/clusterconfig.xml

- Switch to user omm.

      # su - omm

- After ensuring that the database status is normal, run the required command to perform the in-place upgrade or gray upgrade.

  Example 1: Run the gs_upgradectl script to perform the in-place upgrade.

      $ gs_upgradectl -t auto-upgrade -X /opt/software/mogdb/clusterconfig.xml

  Example 2: Run the gs_upgradectl script to perform the gray upgrade.

      $ gs_upgradectl -t auto-upgrade -X /opt/software/mogdb/clusterconfig.xml --grey
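The two examples differ only in the --grey option, so a runbook script can select the command from the chosen mode. The sketch below is a dry run that only prints the command line. The function name `upgrade_command` and the mode labels `in-place` and `gray` are assumptions made for illustration; the gs_upgradectl options are the ones shown in the examples above.

```bash
#!/usr/bin/env bash
# Print the gs_upgradectl command line for the chosen upgrade mode.
# $1: "in-place" or "gray"; $2: path to the cluster configuration XML file.
upgrade_command() {
    case "$1" in
        in-place) echo "gs_upgradectl -t auto-upgrade -X $2" ;;
        gray)     echo "gs_upgradectl -t auto-upgrade -X $2 --grey" ;;
        *)        echo "unknown upgrade mode: $1" >&2; return 1 ;;
    esac
}

# Dry run: show the command for a gray upgrade without executing it.
upgrade_command gray /opt/software/mogdb/clusterconfig.xml
```

Printing the command instead of executing it lets the operator review the exact invocation as user omm before the upgrade starts.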

Upgrade Verification

This section describes how to verify the upgrade and lists the verification items and operations.

Verifying the Checklist of the Project

Table 5 Verification item checklist

| No. | Verification Item | Check Standard | Check Result |
|---|---|---|---|
| 1 | Version check | Check whether the version is correct after the upgrade. | - |
| 2 | Health check | Use the gs_checkos tool to check the OS status. | - |
| 3 | Database status | Use the gs_om tool to check the database status. | - |

Querying the Upgrade Version

This section describes how to check the version.

Procedure

- Log in to the primary database node as user omm and run the source command to reload environment variables.

      # su - omm
      $ source /home/omm/.bashrc

- Run the following command to check the version information of all nodes:

      $ gs_ssh -c "gsql -V"

Checking the Database Status

This section describes how to check the database status.

Procedure

- Log in to the primary database node as user omm.

      # su - omm

- Run the following command to check the database status:

      $ gs_om -t status

  If the value of cluster_state is Normal, the database is normal.

Upgrade Submission

If the verification succeeds after the upgrade, submit the upgrade.

NOTE: Once the upgrade is submitted, it cannot be rolled back.

Procedure

- Log in to the primary database node as user omm.

      # su - omm

- Run the following command to submit the upgrade:

      $ gs_upgradectl -t commit-upgrade -X /opt/software/mogdb/clusterconfig.xml

Version Rollback

This section describes how to roll back the upgrade.

Procedure

- Log in to the primary database node as user omm.

      # su - omm

- Run the following command to perform the rollback operation (rolling back the kernel code). After the rollback, if you need to keep the versions of the kernel code and OM code consistent, execute the pre-installation script in the old package. (For details, see the step for executing the pre-installation script in Upgrade Procedure.)

      $ gs_upgradectl -t auto-rollback -X /opt/software/mogdb/clusterconfig.xml

  NOTE: If the database is abnormal, run the following command to perform the forcible rollback operation:

      $ gs_upgradectl -t auto-rollback -X /opt/software/mogdb/clusterconfig.xml --force

- Check the version after the rollback. Run gs_om with the -V (or --version) option:

      $ gs_om -V

If the upgrade fails, perform the following operations to resolve the issue:

a. Check whether the environment is abnormal.

For example, the disk is fully occupied, the network is faulty, or the installation package or upgrade version is incorrect. After the problem is located and resolved, try to perform the upgrade again.

b. If no environment issue is found or the upgrade fails again, collect related logs and contact technical engineers.

Run the following command to collect logs:

$ gs_collector --begin-time='20200724 00:00' --end-time='20200725 00:00'

If permitted, you are advised to retain the environment.