Typical SQL Optimization Methods

SQL optimization is an iterative process of analysis and experimentation. Run queries before they are put into service to determine whether their performance meets requirements. If it does not, check the execution plan, identify the causes, and optimize the query. Repeat this cycle until the requirements are met.

Optimizing SQL Self-Diagnosis

Performance issues may occur when you query data or run an INSERT, DELETE, UPDATE, or CREATE TABLE AS statement. In this case, you can query the warning column in the PG_CONTROL_GROUP_CONFIG and GS_SESSION_MEMORY_DETAIL views for information to guide performance optimization.

Which alarms can trigger SQL self-diagnosis depends on the setting of resource_track_level. If resource_track_level is set to query, alarms about failures to collect column statistics and to push down SQL statements trigger the diagnosis. If resource_track_level is set to operator, all alarms trigger the diagnosis.

Whether a SQL plan will be diagnosed depends on the settings of resource_track_cost. A SQL plan will be diagnosed only if its execution cost is greater than resource_track_cost. You can use the EXPLAIN keyword to check the plan execution cost.
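The two rules above can be restated as a small decision sketch. This is only a model of the prose, not MogDB code: the alarm labels are invented for illustration, and the behavior for levels other than query and operator is an assumption because the text does not describe them.

```python
# Labels invented for this sketch; they are not MogDB identifiers.
QUERY_LEVEL_ALARMS = frozenset({
    "column_statistics_not_collected",
    "sql_not_pushed_down",
})


def plan_is_diagnosed(plan_cost: float, resource_track_cost: float) -> bool:
    """A plan is diagnosed only if its cost exceeds resource_track_cost."""
    return plan_cost > resource_track_cost


def alarm_triggers_diagnosis(resource_track_level: str, alarm_kind: str) -> bool:
    """Apply the resource_track_level rule from the text to one alarm."""
    if resource_track_level == "operator":
        return True  # every alarm triggers the diagnosis
    if resource_track_level == "query":
        return alarm_kind in QUERY_LEVEL_ALARMS
    return False  # assumption: other levels are not covered by the text


# A plan whose cost equals the threshold is not diagnosed ("greater than").
at_threshold = plan_is_diagnosed(100.0, 100.0)
above_threshold = plan_is_diagnosed(150.0, 100.0)
```

The plan cost passed to `plan_is_diagnosed` corresponds to the cost that EXPLAIN reports for the statement.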

Alarms

Currently, a performance alarm is reported when statistics about one or more columns have not been collected. For details about the optimization, see Updating Statistics and Optimizing Statistics.

Example alarms:

No statistics about a table are collected.

    Statistic Not Collect:
        schema_test.t1

The statistics about a single column are not collected.

    Statistic Not Collect:
        schema_test.t2(c1,c2)

The statistics about multiple columns are not collected.

    Statistic Not Collect:
        schema_test.t3((c1,c2))

The statistics about a single column and multiple columns are not collected.

    Statistic Not Collect:
        schema_test.t4(c1,c2) schema_test.t4((c1,c2))

Restrictions

- An alarm contains a maximum of 2048 characters. If the length of an alarm exceeds this value (for example, a large number of long table names and column names are displayed in the alarm when their statistics are not collected), a warning instead of an alarm will be reported.

      WARNING, "Planner issue report is truncated, the rest of planner issues will be skipped"

- If a query statement contains the Limit operator, alarms of operators lower than Limit will not be reported.
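The alarm notation and the length limit can be illustrated with a short formatter. This is a hypothetical sketch that only mimics the example alarms shown in this section: the function names, the "ALARM"/"WARNING" tags, the handling of several column groups, and the assumption that the 2048-character limit applies to the whole message are all choices made for illustration, not MogDB behavior.

```python
MAX_ALARM_CHARS = 2048
TRUNCATION_WARNING = (
    "Planner issue report is truncated, "
    "the rest of planner issues will be skipped"
)


def missing_stats_entries(table, single_columns=(), column_groups=()):
    """Build alarm entries in the notation used by the example alarms."""
    entries = []
    if not single_columns and not column_groups:
        entries.append(table)  # no statistics at all for the table
    if single_columns:
        # single-column statistics: table(c1,c2)
        entries.append(f"{table}({','.join(single_columns)})")
    for group in column_groups:
        # multi-column statistics: table((c1,c2))
        entries.append(f"{table}(({','.join(group)}))")
    return entries


def report(entries):
    """Return the alarm, or the warning if the alarm would be too long."""
    alarm = "Statistic Not Collect:\n    " + " ".join(entries)
    if len(alarm) > MAX_ALARM_CHARS:
        return "WARNING", TRUNCATION_WARNING
    return "ALARM", alarm


t4_kind, t4_text = report(
    missing_stats_entries("schema_test.t4", ["c1", "c2"], [["c1", "c2"]])
)
long_kind, long_text = report(missing_stats_entries("schema_test." + "t" * 3000))
```

The distinction to notice is the doubled parentheses: `t4(c1,c2)` lists columns that each lack single-column statistics, while `t4((c1,c2))` names one column group that lacks multi-column statistics.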

Optimizing Subqueries

Background

When an application runs SQL statements to operate on the database, subqueries are used heavily because they are clearer than table joins. Especially in complicated query statements, subqueries have more complete and independent semantics, which makes SQL statements clearer and easier to understand.

In MogDB, a nested query is referred to as either a subquery or a sublink, depending on where it appears in the SQL statement.

- Subquery: corresponds to a range table entry (RangeTblEntry) in the query parse tree. That is, a subquery is a SELECT statement that immediately follows the FROM keyword.

- Sublink: corresponds to an expression in the query parse tree. That is, a sublink is a SELECT statement in the WHERE or ON clause or in the target list.

In summary, a subquery is a RangeTblEntry and a sublink is an expression in the query parse tree. A sublink can appear in constraint conditions and expressions. In MogDB, sublinks are classified into the following types:

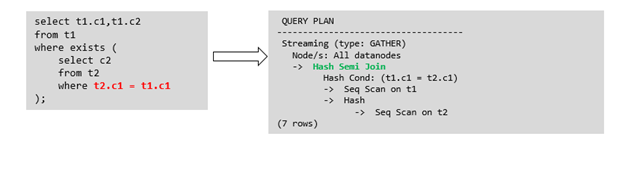

- exist_sublink: corresponds to the EXISTS and NOT EXISTS statements.

- any_sublink: corresponds to the op ANY(SELECT…) statement. op can be the IN, <, >, or = operator.

- all_sublink: corresponds to the op ALL(SELECT…) statement. op can be the IN, <, >, or = operator.

- rowcompare_sublink: corresponds to the RECORD op (SELECT…) statement.

- expr_sublink: corresponds to the (SELECT with a single target list item…) statement.

- array_sublink: corresponds to the ARRAY(SELECT…) statement.

- cte_sublink: corresponds to the WITH(…) query statement.
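To make the subquery/sublink distinction concrete, the sketch below runs one query of each kind against a tiny data set. It uses Python's built-in sqlite3 module purely as a stand-in engine, so it shows only where each construct sits in the statement, not how MogDB plans it. The tables t1 and t2 and their contents are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE t1 (c1 INTEGER, c2 INTEGER);
    CREATE TABLE t2 (c1 INTEGER, c2 INTEGER);
    INSERT INTO t1 VALUES (1, 10), (2, 20);
    INSERT INTO t2 VALUES (1, 2), (1, 3), (4, 1);
""")

queries = {
    # Subquery: a SELECT directly after FROM (a range table entry).
    "subquery": "SELECT s.c2 FROM (SELECT c2 FROM t2 WHERE c1 = 1) AS s "
                "ORDER BY s.c2",
    # exist_sublink: EXISTS (SELECT ...) in the WHERE clause.
    "exist_sublink": "SELECT c1 FROM t1 WHERE EXISTS "
                     "(SELECT 1 FROM t2 WHERE t2.c1 = t1.c1) ORDER BY c1",
    # any_sublink: written here with IN.
    "any_sublink": "SELECT c1 FROM t1 WHERE c1 IN (SELECT c2 FROM t2) "
                   "ORDER BY c1",
    # expr_sublink: a single-column SELECT used as a value in the target list.
    "expr_sublink": "SELECT c1, (SELECT max(c2) FROM t2) AS max_c2 FROM t1 "
                    "ORDER BY c1",
}

results = {name: conn.execute(sql).fetchall() for name, sql in queries.items()}
for name, rows in results.items():
    print(name, rows)
```

Only the first query places the nested SELECT in the FROM clause; in the other three it appears inside an expression, which is what makes them sublinks.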

The sublinks commonly used in OLAP and HTAP are exist_sublink and any_sublink. These sublinks are pulled up by the optimization engine of MogDB. Because subqueries can be used so flexibly in SQL statements, complex subqueries may degrade query performance. Subqueries are classified into non-correlated subqueries and correlated subqueries.

- Non-correlated subqueries

The execution of a subquery is independent of the attributes of the outer query. Therefore, the subquery can be executed before the outer query.

For example:

    select t1.c1,t1.c2
    from t1
    where t1.c1 in (
        select c2
        from t2
        where t2.c2 IN (2,3,4)
    );
                              QUERY PLAN
    ----------------------------------------------------------------
     Hash Join
       Hash Cond: (t1.c1 = t2.c2)
       ->  Seq Scan on t1
             Filter: (c1 = ANY ('{2,3,4}'::integer[]))
       ->  Hash
             ->  HashAggregate
                   Group By Key: t2.c2
                   ->  Seq Scan on t2
                         Filter: (c2 = ANY ('{2,3,4}'::integer[]))
    (9 rows)

- Correlated subqueries

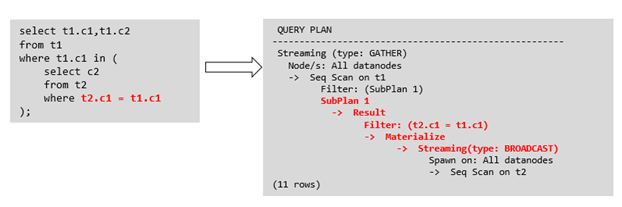

The execution of a subquery depends on some attributes of the outer query, which are used as AND conditions of the subquery. In the following example, t1.c1 in the t2.c1 = t1.c1 condition is a correlated attribute. Such a subquery depends on the outer query and must be executed once for each row processed by the outer query.

For example:

    select t1.c1,t1.c2
    from t1
    where t1.c1 in (
        select c2
        from t2
        where t2.c1 = t1.c1 AND t2.c2 in (2,3,4)
    );
                                  QUERY PLAN
    ------------------------------------------------------------------------
     Seq Scan on t1
       Filter: (SubPlan 1)
       SubPlan 1
         ->  Seq Scan on t2
               Filter: ((c1 = t1.c1) AND (c2 = ANY ('{2,3,4}'::integer[])))
    (5 rows)
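The difference between the two kinds of subquery can be observed on a small data set. The sketch below runs both example statements using Python's built-in sqlite3 module as a stand-in engine; the table contents are invented, and the result only illustrates the semantics, not MogDB's execution plans.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE t1 (c1 INTEGER, c2 INTEGER);
    CREATE TABLE t2 (c1 INTEGER, c2 INTEGER);
    INSERT INTO t1 VALUES (1, 10), (2, 20), (3, 30), (5, 50);
    INSERT INTO t2 VALUES (2, 2), (9, 3), (3, 4), (7, 2);
""")

# Non-correlated: the inner SELECT never mentions t1, so it can be
# evaluated once and its result reused for every row of t1.
non_correlated = """
    SELECT t1.c1, t1.c2 FROM t1
    WHERE t1.c1 IN (SELECT c2 FROM t2 WHERE t2.c2 IN (2, 3, 4))
    ORDER BY t1.c1
"""

# Correlated: t2.c1 = t1.c1 refers to the current outer row, so the
# inner SELECT yields a different result for each row of t1.
correlated = """
    SELECT t1.c1, t1.c2 FROM t1
    WHERE t1.c1 IN (SELECT c2 FROM t2
                    WHERE t2.c1 = t1.c1 AND t2.c2 IN (2, 3, 4))
    ORDER BY t1.c1
"""

non_correlated_rows = conn.execute(non_correlated).fetchall()
correlated_rows = conn.execute(correlated).fetchall()
print(non_correlated_rows)  # [(2, 20), (3, 30)]
print(correlated_rows)      # [(2, 20)]
```

The row with c1 = 3 passes the non-correlated filter because the value 3 appears somewhere in t2.c2, but it fails the correlated one because the only t2 row with c1 = 3 has c2 = 4.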

Sublink Optimization on MogDB

To optimize a sublink, a subquery is pulled up to join with tables in outer queries, preventing the subquery from being converted into a plan involving subplans and broadcast. You can run the EXPLAIN statement to check whether a sublink is converted into such a plan.

For example:

Replace the execution plan on the right of the arrow with the following execution plan:

               QUERY PLAN
    --------------------------------
     Seq Scan on t1
       Filter: (SubPlan 1)
       SubPlan 1
         ->  Seq Scan on t2
               Filter: (c1 = t1.c1)
    (5 rows)

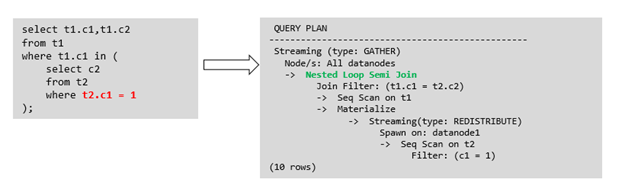

Sublink-release scenarios supported by MogDB

- Pulling up the IN sublink

- The subquery cannot reference columns of the immediately enclosing outer query (columns of queries further out are allowed).

- The subquery cannot contain volatile functions.

Replace the execution plan on the right of the arrow with the following execution plan:

                  QUERY PLAN
    --------------------------------------
     Hash Join
       Hash Cond: (t1.c1 = t2.c2)
       ->  Seq Scan on t1
       ->  Hash
             ->  HashAggregate
                   Group By Key: t2.c2
                   ->  Seq Scan on t2
                         Filter: (c1 = 1)
    (8 rows)

- Pulling up the EXISTS sublink

The WHERE clause must contain a column in the outer query. Other parts of the subquery cannot contain the column. Other restrictions are as follows:

- The subquery must contain the FROM clause.

- The subquery cannot contain the WITH clause.

- The subquery cannot contain aggregate functions.

- The subquery cannot contain a SET, SORT, LIMIT, WindowAgg, or HAVING operation.

- The subquery cannot contain volatile functions.

Replace the execution plan on the right of the arrow with the following execution plan:

    QUERY PLAN
    ------
     Hash Join
       Hash Cond: (t1.c1 = t2.c1)
       ->  Seq Scan on t1
       ->  Hash
             ->  HashAggregate
                   Group By Key: t2.c1
                   ->  Seq Scan on t2
    (7 rows)

- Pulling up an equivalent correlated query containing aggregate functions

The WHERE condition of the subquery must contain a column from the outer query. Equivalence comparison must be performed between this column and related columns in tables of the subquery. These conditions must be connected using AND. Other parts of the subquery cannot contain the column. Other restrictions are as follows:

- The columns in the expression in the WHERE condition of the subquery must exist in tables.

- After the SELECT keyword of the subquery, there must be only one output column. The output column must be an aggregate function (for example, MAX), and the parameter (for example, t2.c2) of the aggregate function cannot be columns of a table (for example, t1) in outer queries. The aggregate function cannot be COUNT.

For example, the following subquery can be pulled up:

    select * from t1 where c1 >(
        select max(t2.c1) from t2 where t2.c1=t1.c1
    );

The following subquery cannot be pulled up because the subquery has no aggregate function:

    select * from t1 where c1 >(
        select t2.c1 from t2 where t2.c1=t1.c1
    );

The following subquery cannot be pulled up because the subquery has two output columns:

    select * from t1 where (c1,c2) >(
        select max(t2.c1),min(t2.c2) from t2 where t2.c1=t1.c1
    );

- The subquery must contain a FROM clause.

- The subquery cannot contain a GROUP BY, HAVING, or SET operation.

- The target list of the subquery cannot contain functions that return a set.

- The WHERE condition of the subquery must contain a column from the outer query. Equivalence comparison must be performed between this column and related columns in tables of the subquery. These conditions must be connected using AND. Other parts of the subquery cannot contain the column. For example, the following subquery can be pulled up:

    select * from t3 where t3.c1=(
        select t1.c1
        from t1 where c1 >(
            select max(t2.c1) from t2 where t2.c1=t1.c1
        ));

If another condition is added to the subquery in the previous example, the subquery cannot be pulled up because it now references a column (t3.c1) of the outermost query. For example:

    select * from t3 where t3.c1=(
        select t1.c1
        from t1 where c1 >(
            select max(t2.c1) from t2 where t2.c1=t1.c1 and t3.c1>t2.c2
        ));

- Pulling up a sublink in the OR clause

If the WHERE condition contains an EXISTS correlated sublink connected by OR, for example:

    select a, c from t1
    where t1.a = (select avg(a) from t3 where t1.b = t3.b)
       or exists (select * from t4 where t1.c = t4.c);

the process of pulling up such a sublink is as follows:

- Extract opExpr from the OR clause in the WHERE condition. The value is t1.a = (select avg(a) from t3 where t1.b = t3.b).

- The opExpr contains a subquery. If the subquery can be pulled up, the subquery is rewritten as select avg(a), t3.b from t3 group by t3.b, generating the NOT NULL condition t3.b is not null. The opExpr is replaced with this NOT NULL condition. In this case, the SQL statement changes to:

    select a, c
    from t1
    left join (select avg(a) avg, t3.b from t3 group by t3.b) as t3 on (t1.a = avg and t1.b = t3.b)
    where t3.b is not null
       or exists (select * from t4 where t1.c = t4.c);

- Extract the EXISTS sublink exists (select * from t4 where t1.c = t4.c) from the OR clause to check whether the sublink can be pulled up. If it can be pulled up, it is converted into select t4.c from t4 group by t4.c, generating the NOT NULL condition t4.c is not null. In this case, the SQL statement changes to:

    select a, c
    from t1
    left join (select avg(a) avg, t3.b from t3 group by t3.b) as t3 on (t1.a = avg and t1.b = t3.b)
    left join (select t4.c from t4 group by t4.c) as t4 on (t1.c = t4.c)
    where t3.b is not null or t4.c is not null;
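The OR-clause pull-up steps above can be checked for semantic equivalence on toy data. The sketch below uses SQLite through Python's standard sqlite3 module purely as a convenient SQL engine; it does not reproduce MogDB's planner. The table contents and the aliases avg_a, d3, and d4 are assumptions made for this illustration.

```python
import sqlite3

# In-memory toy schema; the rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table t1 (a int, b int, c int);
    create table t3 (a int, b int);
    create table t4 (c int);
    insert into t1 values (2, 1, 100), (5, 1, 200), (7, 2, 300), (9, 3, 400);
    insert into t3 values (1, 1), (3, 1), (8, 2);
    insert into t4 values (200), (200), (400);
""")

# Original form: scalar sublink OR EXISTS sublink.
original = """
    select a, c from t1
    where t1.a = (select avg(a) from t3 where t1.b = t3.b)
       or exists (select * from t4 where t1.c = t4.c)
    order by a
"""

# Pulled-up form: both sublinks become left joins against grouped
# derived tables, and the OR tests the join keys for NOT NULL.
pulled_up = """
    select t1.a, t1.c from t1
    left join (select avg(a) as avg_a, b from t3 group by b) as d3
           on (t1.a = d3.avg_a and t1.b = d3.b)
    left join (select c from t4 group by c) as d4
           on (t1.c = d4.c)
    where d3.b is not null or d4.c is not null
    order by t1.a
"""

original_rows = conn.execute(original).fetchall()
pulled_up_rows = conn.execute(pulled_up).fetchall()
print(original_rows == pulled_up_rows, original_rows)
```

Grouping the derived tables by the join key is what keeps the left joins from duplicating rows of t1, so the two forms return the same result set.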

- Sublink-release scenarios not supported by MogDB

Sublinks other than those described above cannot be pulled up. In this case, a join subquery is planned as the combination of subplans and broadcast. As a result, if tables in the subquery have a large amount of data, query performance may be poor.

If a correlated subquery joins with two tables in outer queries, the subquery cannot be pulled up. You need to change the outer query into a WITH clause and then perform the join.

For example:

    select distinct t1.a, t2.a
    from t1 left join t2 on t1.a=t2.a
    and not exists (select a,b from test1 where test1.a=t1.a and test1.b=t2.a);

The outer query is changed into:

    with temp as (
        select * from (select t1.a as a, t2.a as b from t1 left join t2 on t1.a=t2.a)
    )
    select distinct a,b
    from temp
    where not exists (select a,b from test1 where temp.a=test1.a and temp.b=test1.b);

- The subquery (without COUNT) in the target list cannot be pulled up.

For example:

    explain (costs off)
    select (select c2 from t2 where t1.c1 = t2.c1) ssq, t1.c2
    from t1
    where t1.c2 > 10;

The execution plan is as follows:

                QUERY PLAN
    --------------------------------
     Seq Scan on t1
       Filter: (c2 > 10)
       SubPlan 1
         ->  Seq Scan on t2
               Filter: (t1.c1 = c1)
    (5 rows)

The correlated subquery is displayed in the target list (query return list). Values need to be returned even if the condition t1.c1=t2.c1 is not met. Therefore, use left outer join to join T1 and T2 so that SSQ can return padding values when the condition t1.c1=t2.c1 is not met.

NOTE:

ScalarSubQuery (SSQ) and Correlated-ScalarSubQuery (CSSQ) are described as follows:

- SSQ: a sublink that returns a scalar value of a single row with a single column

- CSSQ: an SSQ containing correlation conditions

The preceding SQL statement can be changed into:

    with ssq as
    (
        select t2.c1, t2.c2 from t2
    )
    select ssq.c2, t1.c2
    from t1 left join ssq on t1.c1 = ssq.c1
    where t1.c2 > 10;

The execution plan after the change is as follows:

                QUERY PLAN
    ---------------------------------
     Hash Right Join
       Hash Cond: (ssq.c1 = t1.c1)
       CTE ssq
         ->  Seq Scan on t2
       ->  CTE Scan on ssq
       ->  Hash
             ->  Seq Scan on t1
                   Filter: (c2 > 10)
    (8 rows)

In the preceding example, the SSQ in the target list is pulled up to right join, preventing poor performance caused by the plan involving subplans when the table (T2) in the subquery is too large.

- The subquery (with COUNT) in the target list cannot be pulled up.

For example:

    select (select count(*) from t2 where t2.c1=t1.c1) cnt, t1.c1, t3.c1
    from t1,t3
    where t1.c1=t3.c1 order by cnt, t1.c1;

The execution plan is as follows:

                     QUERY PLAN
    --------------------------------------------
     Sort
       Sort Key: ((SubPlan 1)), t1.c1
       ->  Hash Join
             Hash Cond: (t1.c1 = t3.c1)
             ->  Seq Scan on t1
             ->  Hash
                   ->  Seq Scan on t3
             SubPlan 1
               ->  Aggregate
                     ->  Seq Scan on t2
                           Filter: (c1 = t1.c1)
    (11 rows)

The correlated subquery is displayed in the target list (query return list). Values need to be returned even if the condition t1.c1=t2.c1 is not met. Therefore, use left outer join to join T1 and T2 so that SSQ can return padding values when the condition t1.c1=t2.c1 is not met. However, COUNT is used, which requires that 0 is returned when the condition is not met. Therefore, case-when NULL then 0 else count(*) can be used.

The preceding SQL statement can be changed into:

    with ssq as
    (
        select count(*) cnt, c1 from t2 group by c1
    )
    select case when ssq.cnt is null then 0 else ssq.cnt end cnt, t1.c1, t3.c1
    from t1 left join ssq on ssq.c1 = t1.c1,t3
    where t1.c1 = t3.c1
    order by ssq.cnt, t1.c1;

The execution plan after the change is as follows:

                    QUERY PLAN
    -------------------------------------------
     Sort
       Sort Key: ssq.cnt, t1.c1
       CTE ssq
         ->  HashAggregate
               Group By Key: t2.c1
               ->  Seq Scan on t2
       ->  Hash Join
             Hash Cond: (t1.c1 = t3.c1)
             ->  Hash Left Join
                   Hash Cond: (t1.c1 = ssq.c1)
                   ->  Seq Scan on t1
                   ->  Hash
                         ->  CTE Scan on ssq
             ->  Hash
                   ->  Seq Scan on t3
    (15 rows)

- Non-equivalent correlated subqueries cannot be pulled up.

For example:

    select t1.c1, t1.c2
    from t1
    where t1.c1 = (select agg() from t2 where t2.c2 > t1.c2);

You can perform join twice (one CorrelationKey and one rownum self-join) to rewrite the statement.

You can rewrite the statement in either of the following ways:

- Subquery rewriting

    select t1.c1, t1.c2
    from t1, (
        select t1.rowid, agg() aggref
        from t1,t2
        where t2.c2 > t1.c2 group by t1.rowid
    ) dt /* derived table */
    where t1.rowid = dt.rowid AND t1.c1 = dt.aggref;

- CTE rewriting

    WITH dt as (
        select t1.rowid, agg() aggref
        from t1,t2
        where t2.c2 > t1.c2 group by t1.rowid
    )
    select t1.c1, t1.c2
    from t1, dt
    where t1.rowid = dt.rowid AND t1.c1 = dt.aggref;

NOTICE:

- If the AGG type is COUNT(\*), 0 is used for data padding when CASE-WHEN is not matched. If the type is not COUNT(\*), NULL is used.

- CTE rewriting works better by using share scan.

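The COUNT rewrite described above (left join plus CASE WHEN padding with 0) can be sanity-checked on toy data. This is a sketch that uses SQLite through Python's sqlite3 module as a stand-in SQL engine, not MogDB itself. The rows are hypothetical, the comma join with t3 is written as an explicit join, and both queries are ordered by t1.c1 only so that the comparison does not depend on how NULLs sort.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table t1 (c1 int, c2 int);
    create table t2 (c1 int, c2 int);
    create table t3 (c1 int, c2 int);
    insert into t1 values (1, 10), (2, 20), (3, 30);
    insert into t2 values (1, 0), (1, 0), (3, 0);
    insert into t3 values (1, 0), (2, 0), (3, 0);
""")

# Correlated scalar subquery with COUNT in the target list.
subplan_form = """
    select (select count(*) from t2 where t2.c1 = t1.c1) cnt, t1.c1, t3.c1
    from t1 join t3 on t1.c1 = t3.c1
    order by t1.c1
"""

# Rewritten form: pre-aggregate t2 in a CTE, left join it, and pad
# unmatched rows with 0 because COUNT must return 0 rather than NULL.
join_form = """
    with ssq as (select count(*) cnt, c1 from t2 group by c1)
    select case when ssq.cnt is null then 0 else ssq.cnt end cnt, t1.c1, t3.c1
    from t1
    left join ssq on ssq.c1 = t1.c1
    join t3 on t1.c1 = t3.c1
    order by t1.c1
"""

subplan_rows = conn.execute(subplan_form).fetchall()
join_rows = conn.execute(join_form).fetchall()
print(subplan_rows == join_rows, join_rows)
```

The row for t1.c1 = 2 has no match in t2, so it is the one that exercises the CASE WHEN padding.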

More Optimization Examples

To improve query performance, rewrite the SELECT statement so that the subquery becomes a join with the tables of the parent query, or modify the subquery so that it can be pulled up. Make sure that the rewritten statement is semantically equivalent to the original.

    explain (costs off) select * from t1 where t1.c1 in (select t2.c1 from t2 where t1.c1 = t2.c2);
                QUERY PLAN
    --------------------------------
     Seq Scan on t1
       Filter: (SubPlan 1)
       SubPlan 1
         ->  Seq Scan on t2
               Filter: (t1.c1 = c2)
    (5 rows)

In the preceding example, a subplan is used. To remove the subplan, you can modify the statement as follows:

    explain (costs off) select * from t1 where exists (select t2.c1 from t2 where t1.c1 = t2.c2 and t1.c1 = t2.c1);
                    QUERY PLAN
    ------------------------------------------
     Hash Join
       Hash Cond: (t1.c1 = t2.c2)
       ->  Seq Scan on t1
       ->  Hash
             ->  HashAggregate
                   Group By Key: t2.c2, t2.c1
                   ->  Seq Scan on t2
                         Filter: (c2 = c1)
    (8 rows)

In this way, the subplan is replaced by the hash-join between the two tables, greatly improving the execution efficiency.
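Before relying on a rewrite like the one above, it is worth confirming that both statements return the same rows. The sketch below does this on hypothetical toy data, using SQLite through Python's sqlite3 module as a stand-in engine; it checks semantics only and says nothing about the plans MogDB would choose.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table t1 (c1 int, c2 int);
    create table t2 (c1 int, c2 int);
    insert into t1 values (1, 10), (2, 20), (3, 30), (4, 40);
    insert into t2 values (1, 1), (1, 1), (2, 5), (9, 3), (4, 4);
""")

# Correlated IN sublink, which the example above runs as a subplan.
in_form = """
    select * from t1
    where t1.c1 in (select t2.c1 from t2 where t1.c1 = t2.c2)
    order by c1
"""

# Equivalent EXISTS form: the IN comparison becomes an extra
# equality condition inside the subquery.
exists_form = """
    select * from t1
    where exists (select t2.c1 from t2
                  where t1.c1 = t2.c2 and t1.c1 = t2.c1)
    order by c1
"""

in_rows = conn.execute(in_form).fetchall()
exists_rows = conn.execute(exists_form).fetchall()
print(in_rows == exists_rows, in_rows)
```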

Optimizing Statistics

Background

MogDB generates optimal execution plans based on cost estimation. The optimizer estimates the number of rows and the cost from statistics collected using ANALYZE, so the statistics are vital for both estimates. Global statistics collected using ANALYZE include: relpages and reltuples in the pg_class table; stadistinct, stanullfrac, stanumbersN, stavaluesN, and histogram_bounds in the pg_statistic table.

Example 1: Poor Query Performance Due to the Lack of Statistics

In most cases, the lack of statistics about tables or columns involved in the query greatly affects the query performance.

The table structure is as follows:

    CREATE TABLE LINEITEM
    (
    L_ORDERKEY         BIGINT        NOT NULL
    , L_PARTKEY        BIGINT        NOT NULL
    , L_SUPPKEY        BIGINT        NOT NULL
    , L_LINENUMBER     BIGINT        NOT NULL
    , L_QUANTITY       DECIMAL(15,2) NOT NULL
    , L_EXTENDEDPRICE  DECIMAL(15,2) NOT NULL
    , L_DISCOUNT       DECIMAL(15,2) NOT NULL
    , L_TAX            DECIMAL(15,2) NOT NULL
    , L_RETURNFLAG     CHAR(1)       NOT NULL
    , L_LINESTATUS     CHAR(1)       NOT NULL
    , L_SHIPDATE       DATE          NOT NULL
    , L_COMMITDATE     DATE          NOT NULL
    , L_RECEIPTDATE    DATE          NOT NULL
    , L_SHIPINSTRUCT   CHAR(25)      NOT NULL
    , L_SHIPMODE       CHAR(10)      NOT NULL
    , L_COMMENT        VARCHAR(44)   NOT NULL
    ) with (orientation = column, COMPRESSION = MIDDLE);

    CREATE TABLE ORDERS
    (
    O_ORDERKEY         BIGINT        NOT NULL
    , O_CUSTKEY        BIGINT        NOT NULL
    , O_ORDERSTATUS    CHAR(1)       NOT NULL
    , O_TOTALPRICE     DECIMAL(15,2) NOT NULL
    , O_ORDERDATE      DATE          NOT NULL
    , O_ORDERPRIORITY  CHAR(15)      NOT NULL
    , O_CLERK          CHAR(15)      NOT NULL
    , O_SHIPPRIORITY   BIGINT        NOT NULL
    , O_COMMENT        VARCHAR(79)   NOT NULL
    ) with (orientation = column, COMPRESSION = MIDDLE);

The query statements are as follows:

    explain verbose select
        count(*) as numwait
    from
        lineitem l1,
        orders
    where
        o_orderkey = l1.l_orderkey
        and o_orderstatus = 'F'
        and l1.l_receiptdate > l1.l_commitdate
        and not exists (
            select
                *
            from
                lineitem l3
            where
                l3.l_orderkey = l1.l_orderkey
                and l3.l_suppkey <> l1.l_suppkey
                and l3.l_receiptdate > l3.l_commitdate
        )
    order by
        numwait desc;

If such an issue occurs, you can use the following methods to check whether statistics on the tables or columns have been collected using ANALYZE.

- Execute EXPLAIN VERBOSE to analyze the execution plan and check the warning information:

    WARNING:Statistics in some tables or columns(public.lineitem.l_receiptdate, public.lineitem.l_commitdate, public.lineitem.l_orderkey, public.lineitem.l_suppkey, public.orders.o_orderstatus, public.orders.o_orderkey) are not collected.
    HINT:Do analyze for them in order to generate optimized plan.

- Check whether the following information exists in the log file in the pg_log directory. If it does, the poor query performance was caused by the lack of statistics in some tables or columns.

    2017-06-14 17:28:30.336 CST 140644024579856 20971684 [BACKEND] LOG:Statistics in some tables or columns(public.lineitem.l_receiptdate, public.lineitem.l_commitdate, public.lineitem.l_orderkey, public.lineitem.l_suppkey, public.orders.o_orderstatus, public.orders.o_orderkey) are not collected.
    2017-06-14 17:28:30.336 CST 140644024579856 20971684 [BACKEND] HINT:Do analyze for them in order to generate optimized plan.

By using either of the preceding methods, you can identify tables or columns whose statistics have not been collected using ANALYZE. To resolve the problem, run ANALYZE on the tables and columns reported in the warning or recorded in the logs.
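As a rough illustration of that last step, the sketch below turns the warning text into ANALYZE statements. It is a hypothetical helper, not a MogDB tool: the function name analyze_statements is invented here, the message format is assumed to match the warning quoted above, and the generated statements assume the ANALYZE table_name (column_name, ...) form, so review them before running.

```python
import re

WARNING = (
    "WARNING:Statistics in some tables or columns("
    "public.lineitem.l_receiptdate, public.lineitem.l_commitdate, "
    "public.lineitem.l_orderkey, public.lineitem.l_suppkey, "
    "public.orders.o_orderstatus, public.orders.o_orderkey) are not collected."
)


def analyze_statements(message):
    """Group the schema.table.column names in the message by table and
    emit one ANALYZE statement per table (hypothetical helper)."""
    match = re.search(r"tables or columns\((.*?)\)", message)
    if match is None:
        return []
    columns_by_table = {}
    for name in match.group(1).split(","):
        parts = name.strip().split(".")
        if len(parts) == 3:    # schema.table.column
            columns_by_table.setdefault(".".join(parts[:2]), []).append(parts[2])
        elif len(parts) == 2:  # schema.table with no column listed
            columns_by_table.setdefault(".".join(parts), [])
    statements = []
    for table, cols in columns_by_table.items():
        column_list = f" ({', '.join(cols)})" if cols else ""
        statements.append(f"ANALYZE {table}{column_list};")
    return statements


for statement in analyze_statements(WARNING):
    print(statement)
```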

Optimizing Operators

Background

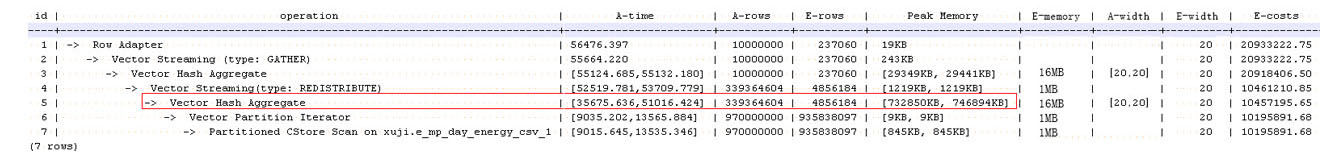

A query statement needs to go through multiple operator procedures to generate the final result. Sometimes, the overall query performance deteriorates due to long execution time of certain operators, which are regarded as bottleneck operators. In this case, you need to execute the EXPLAIN ANALYZE or EXPLAIN PERFORMANCE command to view the bottleneck operators, and then perform optimization.

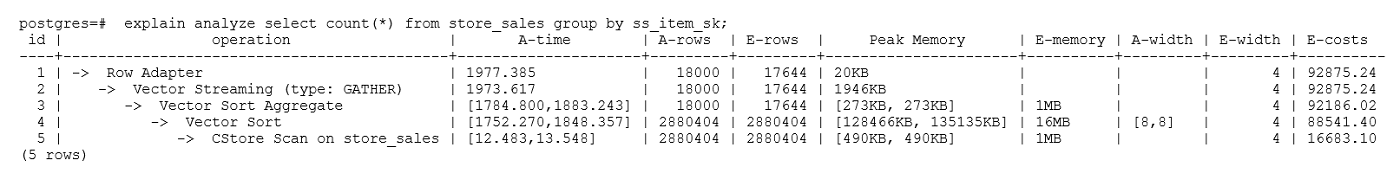

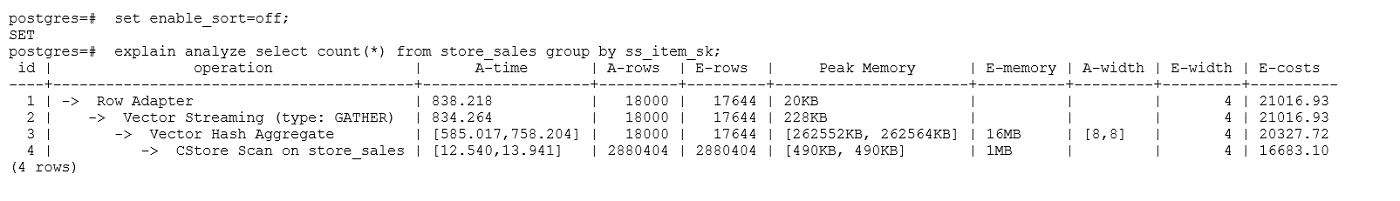

For example, in the following execution process, the execution time of the Hashagg operator accounts for about 66% [(51016-13535)/56476 ≈ 66%] of the total execution time. Therefore, the Hashagg operator is the bottleneck operator for this query. Optimize this operator first.
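The percentage above follows from simple arithmetic, sketched here in Python. The reading of the three numbers is an assumption: 51016 is taken as the cumulative time at which Hashagg finishes, 13535 as the cumulative time of its child operator, and 56476 as the total execution time, all in the same unit. The function name operator_share is hypothetical.

```python
def operator_share(end_time, child_end_time, total_time):
    """Fraction of the total run time spent inside one operator itself,
    that is, its cumulative time minus the cumulative time of its child."""
    return (end_time - child_end_time) / total_time


# Numbers taken from the Hashagg example above.
hashagg_share = operator_share(51016, 13535, 56476)
print(f"{hashagg_share:.0%}")
```

Applying the same calculation to every operator in a plan and sorting by the result is a quick way to find the operator to optimize first.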

Example

- Scan the base table. For queries that filter a large volume of data, such as point queries or range queries, a full table scan using SeqScan takes a long time. To speed up scanning, create an index on the condition column so that IndexScan is selected.

    mogdb=# explain (analyze on, costs off) select * from store_sales where ss_sold_date_sk = 2450944;
     id |           operation            |       A-time        | A-rows | Peak Memory  | A-width
    ----+--------------------------------+---------------------+--------+--------------+---------
      1 | ->  Streaming (type: GATHER)   | 3666.020            | 3360   | 195KB        |
      2 |    ->  Seq Scan on store_sales | [3594.611,3594.611] | 3360   | [34KB, 34KB] |
    (2 rows)

     Predicate Information (identified by plan id)
    -----------------------------------------------
       2 --Seq Scan on store_sales
             Filter: (ss_sold_date_sk = 2450944)
             Rows Removed by Filter: 4968936

    mogdb=# create index idx on store_sales_row(ss_sold_date_sk);
    CREATE INDEX
    mogdb=# explain (analyze on, costs off) select * from store_sales_row where ss_sold_date_sk = 2450944;
     id |                   operation                    |     A-time      | A-rows | Peak Memory  | A-width
    ----+------------------------------------------------+-----------------+--------+--------------+----------
      1 | ->  Streaming (type: GATHER)                   | 81.524          | 3360   | 195KB        |
      2 |    ->  Index Scan using idx on store_sales_row | [13.352,13.352] | 3360   | [34KB, 34KB] |
    (2 rows)

In this example, the full table scan filters out 4968936 rows and returns only 3360 records. After an index is created on the ss_sold_date_sk column, IndexScan reduces the scan time from about 3.6s to about 13 ms.

-

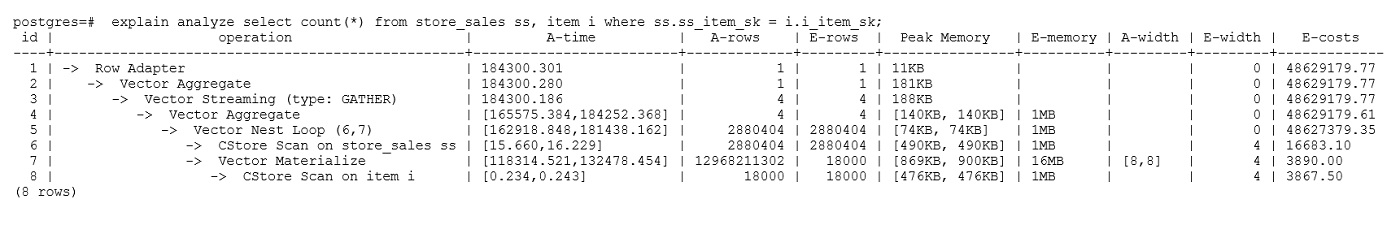

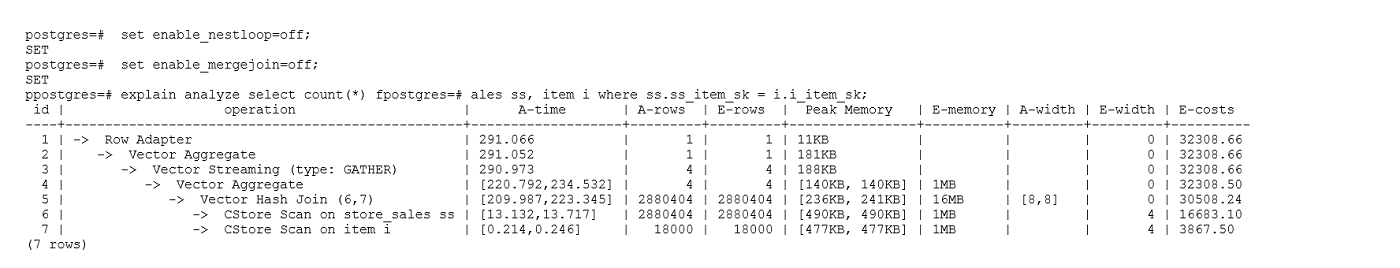

If NestLoop is used for joining tables with a large number of rows, the join may take a long time. In the following example, NestLoop takes 181s. After enable_mergejoin and enable_nestloop are both set to off so that the optimizer selects hash join, the join takes only slightly more than 200 ms.

- Generally, query performance can be improved by selecting HashAgg. If Sort and GroupAgg are used for a large result set, set enable_sort to off so that the optimizer selects HashAgg, which consumes less time than Sort plus GroupAgg.
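The first item in the list above, replacing a full table scan with an index scan, can be reproduced in miniature. The sketch below uses SQLite through Python's sqlite3 module, so the plan text comes from SQLite's EXPLAIN QUERY PLAN rather than from MogDB's EXPLAIN. The table is a toy two-column stand-in for store_sales, and its contents are generated, not real data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("create table store_sales (ss_sold_date_sk int, ss_item_sk int)")
# 10000 generated rows spread over 1000 distinct date keys.
conn.executemany(
    "insert into store_sales values (?, ?)",
    [(2450000 + i % 1000, i) for i in range(10000)],
)

query = "select * from store_sales where ss_sold_date_sk = 2450944"


def plan(sql):
    """Return SQLite's plan description for a statement as one string."""
    rows = conn.execute("explain query plan " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)


plan_before = plan(query)  # full table scan
conn.execute("create index idx on store_sales (ss_sold_date_sk)")
plan_after = plan(query)   # lookup through the new index
matches = conn.execute(query).fetchall()

print(plan_before)
print(plan_after)
```

The same before-and-after comparison applies in MogDB: run EXPLAIN, create the index on the condition column, and run EXPLAIN again to confirm that the scan operator changed.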